GeForce RTX 5070 Ti on Linux (Debian 13)

I got a GeForce RTX 5070 Ti GPU for Christmas. I want to do some AI stuff with it. Maybe I’ll have something about that to post soon. But getting it to work on my system was really a chore! I’m running Debian 13 with a ROG STRIX B550-F motherboard (not the wifi version).

After I put it in and tried to boot, I saw Grub’s selection screen, but almost immediately my monitor went black, and within 10 or 20 seconds it lost its connection entirely.

By adding an extra echo line after initrd in grub I could see that at least that part was running. But it was dying soon after, and I had no messages to diagnose the problem. Doing something like journalctl -b -1 gave nothing (or rather info from the boot prior).

Adding modeset stuff to grub didn’t help. Adding noacpi or pci=noacpi didn’t help. Turning off ACPI altogether with acpi=off let me boot though. I didn’t have working graphics, but I could Ctrl-Alt-F3 to a console and run commands.

Even after installing nVidia’s driver, I didn’t have a working display. I followed their instructions for the open-source driver, which gave me version 590.48.01. (Their old closed-source driver doesn’t support newer cards like the 5070 Ti.) This reddit thread helped too. Basically I did this:

apt-get install linux-headers-amd64

apt-get install linux-headers-$(uname -r)

wget https://developer.download.nvidia.com/compute/cuda/repos/debian13/x86_64/cuda-keyring_1.1-1_all.deb

dpkg -i cuda-keyring_1.1-1_all.deb

apt-get update

apt-get install nvidia-open

Then I could run nvidia-smi, and lsmod | awk 'NR==1||/nvid/' showed nvidia_uvm, nvidia, and drm. Also I could see from mokutil --sb-state that I was running with SecureBoot disabled, which Google AI thinks is a good idea.
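The awk filter can also be written as a small Python sketch. It takes text in the layout of /proc/modules (the file that lsmod formats) and keeps every line that mentions nvid anywhere, which is presumably why a module like drm shows up: it lists an nvidia module among its users. The function name and the sample text are made up for illustration; the sample is not real output.

```python
def modules_matching(proc_modules_text, needle="nvid"):
    """Return names of modules whose /proc/modules line mentions `needle`."""
    names = []
    for line in proc_modules_text.splitlines():
        fields = line.split()
        # Match anywhere in the line, like awk's /nvid/, so a module that is
        # merely used by an nvidia module is reported too.
        if fields and needle in line:
            names.append(fields[0])
    return names


# Hypothetical sample: name, size, refcount, users, state, address
SAMPLE = """\
nvidia_uvm 100 0 - Live 0x0
nvidia 200 1 nvidia_uvm, Live 0x0
drm 300 1 nvidia, Live 0x0
ext4 400 1 - Live 0x0
"""

print(modules_matching(SAMPLE))
```

On a real system you could pass the contents of /proc/modules instead of SAMPLE.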

But my display problems continued. When I ran startx by hand I could see a segfault in /var/log/Xorg.0.log. Later I got this to go away (perhaps it was adding grub commands to blacklist nouveau sooner?), and then X would start, and with pstree I could see that xfce was running and even restoring commands from my session, like terminals and firefox. But the display wouldn’t switch: I was still looking at the console, with some messages printed by X.

In the boot log I saw errors about not finding the DRM device, and before that there were messages about IRQ problems for the PCI device. There was a recommendation to use pci=biosirq, but that didn’t help. (I think that hint is for really old systems anyway.) I figured probably acpi=off was messing things up.

I tried upgrading my motherboard BIOS. It was a few years out-of-date. I installed the latest version (3635, published 2025-11-06 with build date 2025-09-30). After unzipping, I renamed the file to RSB550FG.CAP. Then I formatted an 8GB USB drive with mkfs.vfat --mbr=yes -I -F 32 /dev/disk/by-id/usb-SanDisk_Cruzer_Fit_4C532000030816101033-0\:0. Then I copied the file to the root of the drive, ran sync, and yanked the USB stick. With case power on but the computer off, I stuck the USB into the Flash BIOS port in the back and held down the Flash BIOS button for a few seconds. When I let go, the USB activity light started blinking. If I shaded the motherboard panel I could see that the Flash BIOS light was blinking too. So I let it run for 5 or 10 minutes, and when I checked the blinking had stopped. I booted, and the BIOS still worked!

Somehow this made grub much slower. Every keystroke took a second or two to register, and I easily lost letters. I tried some BIOS tweaks, like turning off Legacy USB support. But the only thing that worked was changing GRUB_GFXMODE to 1024x768. Actually 640x480 was even better, but all the lines wrapped and it was unreadable. I’m pretty sure a lower resolution shouldn’t be needed. I think flashing the BIOS wiped all my old settings, so maybe there is some better way to restore grub’s performance, but I haven’t found it yet.

Anyway, the BIOS upgrade didn’t solve my problems. Allowing ACPI still caused a failure right after starting the kernel. I suspected that finding a proper alternative to acpi=off would do the trick. I found this article about using the 5070 Ti on Linux, and I started changing BIOS settings. The issue turned out to be “Above 4G Decoding” (under “Advanced > PCI Subsystem Settings”). For me it was disabled. When I enabled it, suddenly everything worked.

That’s it. I haven’t tried installing cuda-toolkit yet, but that’s next. I’ve had an AMD card for years. A year or two ago I got ROCm compiled, after much struggle, but pytorch would just segfault. I’m excited to play around with AI a bit now.

Anyway, “Above 4G Decoding” was definitely not in Google AI’s many, many recommendations as I searched for solutions, so perhaps this blog entry will go into its next training and help someone out.

Solving bison shift/reduce conflicts in Postgres

I had to fix some shift/reduce conflicts in the Postgres bison grammar recently.

I’ve never done this before, so it was a learning experience. Maybe my story will help some other new Postgres contributor, or anyone struggling with this technology, which is central to computer science but seldom used by many day-to-day programmers.

Back in 2018 I read lex & yacc by Doug Brown, John R. Levine, and Tony Mason (second edition published in 1992). Levine’s 2009 flex & bison would have been a more practical choice, but I liked getting some history too. Re-reading some parts of that book was very helpful. So were the bison manual and some StackOverflow questions. Now I’m working through The Dragon Book, and that would have made a great resource too. It’s easy to write some bison without going that deep, but if you get stuck it can be frustrating.

I’ve been adding syntax from SQL:2011 for application-time updates and deletes. If you have a PERIOD or range column named valid_at, you can say UPDATE t FOR PORTION OF valid_at FROM '2024-01-01' TO '2024-02-01' SET foo = bar. (For more details you can watch my talk.) The FOR PORTION OF bounds don’t have to be just literals. You could also say, for example, FROM current_time TO current_time + INTERVAL '1' HOUR.

Actually I picked that example on purpose. Did you know that intervals support this syntax: INTERVAL '1:02:03' HOUR TO MINUTE? That means: Interpret the string as hours, and preserve the precision down to the minute. By default if you ask for 1:02:03 HOUR you get just an hour. But TO MINUTE means you get 1 hour and 2 minutes. (You still lose the seconds.)
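As a rough model of that truncation, here is a Python sketch. It mimics the behavior described above, not how Postgres implements it. The function name and the restriction to H:M:S strings are simplifications for illustration.

```python
from datetime import timedelta

FIELDS = ["HOUR", "MINUTE", "SECOND"]


def truncate_interval(text, last_field):
    """Keep precision down to last_field and zero out anything finer."""
    parts = [int(p) for p in text.split(":")]   # [hours, minutes, seconds]
    keep = FIELDS.index(last_field) + 1
    parts[keep:] = [0] * (len(parts) - keep)
    return timedelta(hours=parts[0], minutes=parts[1], seconds=parts[2])


print(truncate_interval("1:02:03", "HOUR"))     # like INTERVAL '1:02:03' HOUR
print(truncate_interval("1:02:03", "MINUTE"))   # like ... HOUR TO MINUTE
```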

So how does an LR(1) parser deal with FOR PORTION OF valid_at FROM current_time + INTERVAL '1' HOUR TO MINUTE? When we consider the TO, does it belong with the interval, or does it start the closing bound of the FOR PORTION OF? This is a shift/reduce conflict. Bison can’t look ahead further than one token to guess what is correct—and I’m not sure it would help even if it could.

When the tokens seen so far complete some piece of the grammar, called a “rule”, the parser “reduces”: it pops those tokens off its stack, replaces them with the rule’s name, and runs the custom “action” attached to the rule. Otherwise, bison “shifts” the next token onto the stack of not-yet-reduced tokens, so that it can reduce a rule in the future. Each time it sees a token, bison must decide whether to reduce or to shift.
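To make shifting and reducing concrete, here is a toy loop in Python for a two-rule grammar (expr -> expr '+' NUM | NUM). It is only a sketch of the idea: bison generates a table-driven state machine, and this hand-written version never needs lookahead, so it cannot show a conflict. The token shapes and function name are invented for the example.

```python
def parse_sum(tokens):
    """Toy shift/reduce loop for the grammar: expr -> expr '+' NUM | NUM."""
    stack = []               # (symbol, value) pairs not yet reduced further
    trace = []               # the decisions made, in order
    pending = list(tokens)   # input still to be read
    while True:
        kinds = [symbol for symbol, _ in stack]
        if kinds[-3:] == ["expr", "+", "NUM"]:
            # Reduce: pop the rule's right side, push its left side.
            (_, right) = stack.pop()
            stack.pop()
            (_, left) = stack.pop()
            stack.append(("expr", left + right))   # the rule's "action"
            trace.append("reduce expr -> expr + NUM")
        elif kinds == ["NUM"]:
            (_, value) = stack.pop()
            stack.append(("expr", value))
            trace.append("reduce expr -> NUM")
        elif pending:
            # Nothing to reduce yet, so shift the next token.
            stack.append(pending.pop(0))
            trace.append("shift " + stack[-1][0])
        else:
            break
    if [symbol for symbol, _ in stack] != ["expr"]:
        raise SyntaxError("input is not a sum")
    return stack[0][1], trace


value, trace = parse_sum([("NUM", 1), ("+", None), ("NUM", 2)])
print(value)
for step in trace:
    print(step)
```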

Here is the rule for FOR PORTION OF:

for_portion_of_clause:

FOR PORTION OF ColId FROM a_expr TO a_expr

{

ForPortionOfClause *n = makeNode(ForPortionOfClause);

n->range_name = $4;

n->location = @4;

n->target_start = $6;

n->target_end = $8;

$$ = n;

}

;

The first line is the name of the rule, so we can use it in higher-level contexts: the UPDATE statement (and also DELETE). The second line has the inputs we need to match to complete the rule. Some of them are “terminals”: keywords, identifiers, operators, literals, punctuation, etc. (in this case FOR, PORTION, OF, FROM, TO). Some are “non-terminals”: further rules (here ColId and a_expr). Below all that is a block of C code: the action that gets run when we reduce. We call the name the “left side” of the rule, and the inputs the “right side” or “body”. Rules are also sometimes called “productions”. The lex & yacc book doesn’t use that terminology, but the Dragon Book does, and so does the Postgres source code.

So each bound is an a_expr. The a_expr rule is a complicated production with just about anything you can do in Postgres: a literal, a variable, a function call, an operator, a subquery, lots of weird SQL keywords, etc. Many contexts forbid some of these things, e.g. you can’t refer to a column in a DEFAULT expression or a partition bound—but that is enforced during analysis, not by the grammar.

To speak more precisely, when a non-terminal can match inputs in more than one way (like a_expr), we should call each alternative a rule or production. But in bison you commonly write the name once then separate each body with a pipe (|), so all the rules share one name. There is not one a_expr rule, but many: 68 by my count. But such terminological precision is rarely needed.

Take our example, FOR PORTION OF valid_at FROM current_time + INTERVAL '1' HOUR • TO MINUTE. I’ve added a dot to represent bison’s “cursor”. It is considering what to do with the TO. We could reduce the a_expr right now, leaving the TO to become part of the FOR PORTION OF. Or we could shift the TO so that it eventually gets reduced as part of the a_expr.

Actually it’s not about reducing the a_expr, but reducing one of its many sub-rules, in this case the interval. An a_expr can be a c_expr (among other things), and a c_expr can be an AexprConst (among other things), and an AexprConst can be a ConstInterval Sconst opt_interval (among other things), and the opt_interval is the problem, because it can optionally have a TO. Here is that rule:

opt_interval:

YEAR_P

{ $$ = list_make1(makeIntConst(INTERVAL_MASK(YEAR), @1)); }

| MONTH_P

{ $$ = list_make1(makeIntConst(INTERVAL_MASK(MONTH), @1)); }

| DAY_P

{ $$ = list_make1(makeIntConst(INTERVAL_MASK(DAY), @1)); }

| HOUR_P

{ $$ = list_make1(makeIntConst(INTERVAL_MASK(HOUR), @1)); }

| MINUTE_P

{ $$ = list_make1(makeIntConst(INTERVAL_MASK(MINUTE), @1)); }

| interval_second

{ $$ = $1; }

| YEAR_P TO MONTH_P

{ ... }

| DAY_P TO HOUR_P

{ ... }

| DAY_P TO MINUTE_P

{ ... }

| DAY_P TO interval_second

{ ... }

| HOUR_P TO MINUTE_P

{ ... }

| HOUR_P TO interval_second

{ ... }

| MINUTE_P TO interval_second

{ ... }

| /*EMPTY*/

{ $$ = NIL; }

;

(I’ve omitted most of the actions, since actions don’t affect bison’s choices.) The opt_interval rule is what bison is trying to reduce.

When you have a shift/reduce conflict, make gives you an error like this:

/usr/bin/bison -d -o gram.c gram.y

gram.y: conflicts: 4 shift/reduce

gram.y: expected 0 shift/reduce conflicts

make[2]: *** [gram.c] Error 1

make[1]: *** [parser/gram.h] Error 2

make: *** [submake-generated-headers] Error 2

That is not much to go on.

By the way, do you see the expected 0? A shift/reduce conflict doesn’t have to be fatal. Bison will default to shift. But this is a bit sketchy. It means you could accidentally write an ambiguous grammar that causes trouble later. So bison lets you declare how many conflicts you expect, and it only fails if it finds a different count. I like that for Postgres the expected conflict count is zero. For MariaDB it is 62.

Anyway, we have four shift/reduce conflicts. Now what? Let’s ask Bison where they are. It can take a -v/--verbose option to generate a “report file”. Since Postgres’s grammar lives in gram.y, the report file is gram.output. (Modern versions offer more control with -r/--report and --report-file, but macOS only supports -v.) We aren’t running bison directly, but we can control things like this:

make BISONFLAGS=-v

That gives us a file with 5.5 million lines, but right at the top we see:

State 1454 conflicts: 1 shift/reduce

State 1455 conflicts: 1 shift/reduce

State 1456 conflicts: 1 shift/reduce

State 1459 conflicts: 1 shift/reduce

Then if we search for /^state 1454 we see this:

state 1454

2000 opt_interval: DAY_P .

2005 | DAY_P . TO HOUR_P

2006 | DAY_P . TO MINUTE_P

2007 | DAY_P . TO interval_second

TO shift, and go to state 2670

TO [reduce using rule 2000 (opt_interval)]

$default reduce using rule 2000 (opt_interval)

So in this state, bison has four candidate rules it could eventually reduce, numbered 2000, 2005, 2006, 2007. Below that are possible valid tokens and what to do for each one. We see TO twice, which is the problem. The square brackets highlight the conflict: they mark a transition that will never happen. (The $default line means if the next token is not a TO, we can reduce and leave that token for some higher-level rule to match.) So this is how we know one half of the problem is opt_interval. The other half is for_portion_of_clause. Bison doesn’t tell us that, but (1) we just added it to a previously-working grammar, and (2) we can see that TO is the issue, and that’s where we match a TO.
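With a report this large, it can help to pull the conflict summary lines out programmatically. Here is a Python sketch that assumes the “State N conflicts: M shift/reduce” format shown above. Other bison versions may word the report differently, and the function name is just for illustration.

```python
import re

# Matches summary lines such as "State 1454 conflicts: 1 shift/reduce".
CONFLICT_LINE = re.compile(r"^State (\d+) conflicts: (\d+) shift/reduce")


def conflicted_states(report_lines):
    """Map each conflicted state number to its shift/reduce conflict count."""
    found = {}
    for line in report_lines:
        match = CONFLICT_LINE.match(line)
        if match:
            found[int(match.group(1))] = int(match.group(2))
    return found


sample = [
    "State 1454 conflicts: 1 shift/reduce",
    "State 1455 conflicts: 1 shift/reduce",
    "State 1456 conflicts: 1 shift/reduce",
    "State 1459 conflicts: 1 shift/reduce",
    "Grammar",
]
print(conflicted_states(sample))
```

For the real file, pass an open file object (for example open("gram.output")) instead of the sample list, since the function only iterates over lines.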

This is one of the four shift/reduce conflicts. The other three are also from opt_interval, caused by YEAR_P TO MONTH_P, HOUR_P TO {MINUTE_P,interval_second}, and MINUTE_P TO interval_second. Essentially it’s all one conflict, but we can hit the TO after DAY, HOUR, YEAR, or MINUTE, so that’s four different states.

We can use “precedence” to resolve such ambiguities. It’s just like elementary arithmetic: multiplication has higher precedence than addition. It is stickier. We do it first. But what does that mean in bison? Bison compares the precedence of the non-terminal rule it could reduce (opt_interval) vs the precedence of the token it could shift (TO). Rules don’t really have precedence themselves, but they get the precedence of their final terminal token.

So in state 1454, if we give DAY_P a different precedence than TO, bison will know whether to reduce (rule 2000), or shift (and eventually reduce rule 2005, 2006, or 2007). If DAY_P is higher, we’ll reduce. If TO is higher, we’ll shift.
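That decision can be sketched in Python. This models the comparison described here plus bison’s documented tie-break by associativity when both sides sit on the same level. The function names and the declaration lists are hypothetical, and real bison does this while building its tables, not at parse time.

```python
def precedence_levels(declarations):
    """Later declaration lines get higher precedence, as in bison."""
    levels = {}
    for level, (assoc, tokens) in enumerate(declarations, start=1):
        for token in tokens:
            levels[token] = (level, assoc)
    return levels


def resolve(rule_last_terminal, lookahead, levels):
    """Decide between reducing a rule and shifting the lookahead token."""
    rule = levels.get(rule_last_terminal)
    token = levels.get(lookahead)
    if rule is None or token is None:
        return "conflict"      # reported by bison, which then defaults to shift
    if rule[0] > token[0]:
        return "reduce"
    if rule[0] < token[0]:
        return "shift"
    # Same level: associativity breaks the tie.
    return {"left": "reduce", "right": "shift", "nonassoc": "error"}[token[1]]


# Hypothetical declarations: DAY_P on a lower line than TO.
levels = precedence_levels([("nonassoc", ["DAY_P"]), ("nonassoc", ["TO"])])
print(resolve("DAY_P", "TO", levels))   # TO is higher, so shift
print(resolve("DAY_P", "TO", {}))       # no precedence declared at all
```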

Should we shift or reduce? The only answer is to shift. If we reduce by default, then users can never say INTERVAL '1' DAY TO HOUR (even in a completely different context). No amount of parens will make bison do otherwise. But if we shift, then this is a syntax error: FOR PORTION OF valid_at FROM '2013-03-01'::timestamp + INTERVAL '1' HOUR TO '2014-01-01' (because after shifting the TO bison is still trying to reduce opt_interval), but this fixes it: FOR PORTION OF valid_at FROM ('2013-03-01'::timestamp + INTERVAL '1' HOUR) TO '2014-01-01'. Users can get what they want by adding parens.

So to shift, we give TO a higher precedence than YEAR_P, DAY_P, HOUR_P, and MINUTE_P. By default a token has no precedence, but bison lets you make a list of declarations where lower lines have higher precedence. So for a long time my patch added this:

%nonassoc YEAR_P DAY_P HOUR_P MINUTE_P

%nonassoc TO

(Actually I had MONTH_P in there too, but that isn’t needed because you can’t have MONTH TO ....)

But this is frowned upon. There is a comment right above my change that gave me a guilty conscience for at least a year, maybe a few:

/*

* Sometimes it is necessary to assign precedence to keywords that are not

* really part of the operator hierarchy, in order to resolve grammar

* ambiguities. It's best to avoid doing so whenever possible, because such

* assignments have global effect and may hide ambiguities besides the one

* you intended to solve. (Attaching a precedence to a single rule with

* %prec is far safer and should be preferred.) If you must give precedence

* to a new keyword, try very hard to give it the same precedence as IDENT.

* If the keyword has IDENT's precedence then it clearly acts the same as

* non-keywords and other similar keywords, thus reducing the risk of

* unexpected precedence effects.

*/

I knew I had to fix this before my patch would get accepted. Those two lines had to go.

What is the %prec approach suggested by the comment? I said above that a rule’s precedence comes from its last terminal token. But you can override a rule’s precedence by putting %prec token_name after the right side. For example a_expr has this rule:

| a_expr AT TIME ZONE a_expr %prec AT

{ ... }

We’re saying we should reduce this rule with the precedence of AT.

I tried all kinds of %prec placements that didn’t work. My mental model of bison’s process was too vague. The reason I’m writing this story is really to record the details and thought process that finally gave me the solution.

For example, putting %prec on for_portion_of_clause doesn’t do any good, because the conflict is lower down than that, inside opt_interval. That was counter-intuitive, because I knew that adding for_portion_of_clause was what caused the problem. It’s what offers bison a way to reduce early and still have a place to use the TO. But despite for_portion_of_clause exerting influence, at the moment of decision we are in the middle of a different rule. It’s action-at-a-distance.

Another breakthrough was realizing that the comparison is between a rule (to reduce) and a token (to shift). Within opt_interval I kept trying to give low precedence to the rules without TO and high precedence to the rules with it. But the comparison isn’t between two rules. It’s between a rule and a token. The token is TO itself. There isn’t any way to give precedence to a token with %prec. That only modifies a rule. If TO has an undefined precedence, there will always be a conflict. So I did have to declare a precedence for TO, but following the comment above I could give it the same precedence as IDENT:

%nonassoc IDENT PARTITION RANGE ROWS GROUPS PRECEDING FOLLOWING CUBE ROLLUP

SET KEYS OBJECT_P SCALAR TO VALUE_P WITH WITHOUT PATH

Then the conflicting opt_interval rules needed a lower precedence, to prevent reducing early. A low-precedence keyword we use a lot is IS, so I did this:

opt_interval:

YEAR_P %prec IS

{ ... }

| DAY_P %prec IS

{ ... }

| HOUR_P %prec IS

{ ... }

| MINUTE_P %prec IS

{ ... }

Now we’ll shift the TO and follow those rules that include it.

Finally I had a solution!

It’s worth considering another approach. We can also enforce precedence with the structure of our rules, without declaring an explicit higher/lower precedence for terminals. For example for simple arithmetic we could do this (from the Dragon Book, p. 49–50):

expr: expr + term

| expr - term

| term

term: term * factor

| term / factor

| factor

factor: digit

| '(' expr ')'

For n levels of precedence, we need n+1 different non-terminals. But I think this approach gets unwieldy quickly. And anyway was I going to rewrite the Postgres grammar to do this?
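To see how the structure alone enforces precedence, here is a Python sketch that evaluates single-digit arithmetic with one function per non-terminal. It uses recursive descent rather than an LR parser like bison’s, so the left-recursive rules become loops. That is a swapped-in technique chosen to keep the example short.

```python
def evaluate(text):
    """Evaluate single-digit arithmetic using the expr/term/factor layering."""
    tokens = [ch for ch in text if not ch.isspace()]
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def take():
        nonlocal pos
        if pos >= len(tokens):
            raise SyntaxError("unexpected end of input")
        tok = tokens[pos]
        pos += 1
        return tok

    def expr():                      # expr: expr + term | expr - term | term
        value = term()
        while peek() in ("+", "-"):
            if take() == "+":
                value += term()
            else:
                value -= term()
        return value

    def term():                      # term: term * factor | term / factor | factor
        value = factor()
        while peek() in ("*", "/"):
            if take() == "*":
                value *= factor()
            else:
                value /= factor()
        return value

    def factor():                    # factor: digit | '(' expr ')'
        tok = take()
        if tok == "(":
            value = expr()
            if take() != ")":
                raise SyntaxError("expected ')'")
            return value
        if tok.isdigit():
            return int(tok)
        raise SyntaxError(f"unexpected {tok!r}")

    result = expr()
    if peek() is not None:
        raise SyntaxError(f"unexpected {peek()!r}")
    return result


print(evaluate("1 + 2 * 3"))
print(evaluate("(1 + 2) * 3"))
```

Because term is only ever called from inside expr, a multiplication is always finished before the surrounding addition sees its result. No precedence declarations are involved.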

Postgres actually does this a bit already though. We’ve seen a_expr and c_expr. Of course there is also b_expr. b_expr is a more limited set of rules than a_expr, and c_expr is everything they have in common.

We use b_expr to solve some shift/reduce conflicts. For example a column’s DEFAULT value can only take a b_expr, because a NOT would be a shift/reduce conflict: is it a NOT NULL constraint on the column, or is it part of the DEFAULT expression, e.g. NOT LIKE? One rule that b_expr accepts is '(' a_expr ')', so even in contexts like DEFAULT, you can get whatever you want by wrapping your text in parentheses.

So could I have saved myself a lot of trouble and made FOR PORTION OF take FROM b_expr TO b_expr instead? No, because the problem was inside c_expr, which is shared by both rules.

I probably could have invented a d_expr, but that would have been a lot of work, only to produce a more tangled grammar that I expect no reviewer would have accepted.

So that’s the story of how I fixed my four shift-reduce conflicts.

But just when you think you’ve killed the zombie, he rises from the dead. Right around the same time, I realized my grammar was wrong. In SQL, you can give your table an alias when you UPDATE or DELETE. It can use AS or not: UPDATE tablename [[AS] t] and DELETE FROM tablename [[AS] t]. I was putting FOR PORTION OF after the alias, but according to SQL:2011 it comes before. I tried moving it, and I got . . . 30 shift/reduce conflicts!

These looked really hairy: the problem was that AS is optional and the alias can be nearly anything. It can’t be a reserved keyword (unless you quote it), but many keywords are not reserved (per the standard), so there’s ambiguity there. Allowing a_expr, which can be nearly anything, followed by an optional alias, which can be nearly anything, is bad news. I really thought I was in trouble.

Could I just ignore the standard? I don’t think that would be acceptable, not in this matter. But it was tempting enough that I checked what MariaDB and IBM DB2 were doing. Somehow they were making it work. I should figure it out too.

I think I took a walk, or maybe I slept on it, but I realized that we already have the same problem with column aliases when you SELECT. Each selected column is an a_expr, and their aliases don’t require AS. What was Postgres doing to make that work?

I found this rule for SELECTing:

target_el: a_expr AS ColLabel { ...}

| a_expr BareColLabel { ... }

| a_expr { ... }

| '*' { ... }

;

It turns out that ColLabel allows anything (even reserved keywords!), but BareColLabel is more restricted.

So I could do something similar: when there is an AS, permit everything, but otherwise only permit tokens that are conflict-free. In fact to keep backward-compatibility, I could leave the old grammar rule for UPDATE and DELETE in place (each had only one), and only get more restrictive when FOR PORTION OF is present. Maybe reviewers will ask me to change things, but at the moment my solution looks like this:

opt_alias:

AS ColId { ... }

| BareColLabel { ... }

| /* empty */ %prec UMINUS { $$ = NULL; }

;

UpdateStmt: opt_with_clause UPDATE relation_expr_opt_alias

SET set_clause_list

from_clause

where_or_current_clause

returning_clause

{ ... }

| opt_with_clause UPDATE relation_expr

for_portion_of_clause opt_alias

SET set_clause_list

from_clause

where_or_current_clause

returning_clause

{ ... }

;

I’m not sure I like using BareColLabel for non-column aliases, but the existing relation_expr_opt_alias uses ColId, so maybe it’s okay.

The %prec is necessary to resolve a conflict with USING, which is permitted by BareColLabel, and also allowed in DELETE FROM ... USING. If I added a separate list for bare table labels, we could leave out USING and not use %prec here, but I don’t think maintaining another keyword list would be popular.

That’s it! I’m happy that at 47 I can still work out the errors in my mental model of something and correct them. Hopefully by writing this down I won’t have to do it more than once. :-)

Postgres REPLICA IDENTITY

Both logical decoding and logical replication use a table’s REPLICA IDENTITY. This is a way to say which row was changed by an UPDATE or DELETE. In other words it identifies the “old” row.

Logical decoding will use the replica identity to say which row was changed, if available, but if not then the information is simply omitted. No big deal.

In logical replication, the subscriber looks for the replica identity with each change, and it uses it to know which row to remove, either because it was replaced or because it was deleted. So you can always replicate inserts and truncates, but you can only replicate updates and deletes if you have an appropriate replica identity. In fact even on the publication side, Postgres will forbid changes without an appropriate replica identity, as we will see.

Normally a table has DEFAULT for its replica identity. This means it will use the table’s primary key (if present). You can’t set the replica identity when you create the table, but you can change it with ALTER TABLE. Besides DEFAULT, it can be USING INDEX <index> or FULL or NOTHING. The replica identity is stored in pg_class.relreplident.
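For reading pg_class.relreplident, the column holds a single character per setting. Here is a small Python lookup as a sketch. The helper name and the query string are illustrative only, and the query is not executed here.

```python
# One-character codes stored in pg_class.relreplident.
REPLICA_IDENTITY = {
    "d": "DEFAULT",       # the primary key, if the table has one
    "n": "NOTHING",
    "f": "FULL",
    "i": "USING INDEX",
}

# A query you might run in psql to see the code for a table named t.
QUERY = "SELECT relname, relreplident FROM pg_class WHERE relname = 't'"


def describe_replident(code):
    """Translate a relreplident code into the ALTER TABLE keyword."""
    return REPLICA_IDENTITY[code]


print(describe_replident("d"))
```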

I had a lot of questions about how each of these works. Mostly I wanted to know when things failed: creating/altering the table/publication, making the change, or receiving it. All my tests were done on Postgres 17 using the REL_17_0 tag after doing make world && make install-world. I wanted to replicate from one database to another in the same cluster, but that requires some (slightly) more complicated commands. To keep the SQL simple, I ran separate clusters, one with a database named sender, the other with a database named receiver. The sending cluster runs on 5432 to keep the receiver’s connection strings simpler.

On the sender I set wal_level like this (on a Mac):

sed -i '' -e '/wal_level/a\

wal_level=logical' ~/local/pgsql/data/postgresql.conf

On Linux I think this would be just:

sed -i '/wal_level/awal_level=logical' ~/local/pgsql/data/postgresql.conf

You can find the SQL for each test in this github repo.

NOTHING

Let’s start with NOTHING. This means there is no information about the old row. If we’re looking for failures, this is nice and simple.

Q1: What happens if you create a publication for it?

This is allowed:

sender=# create table t (a text);

CREATE TABLE

sender=# alter table t replica identity nothing;

ALTER TABLE

sender=# create publication p for table t;

CREATE PUBLICATION

Q2: What happens if you add it to an existing publication?

This is allowed too:

sender=# create table t (a text);

CREATE TABLE

sender=# alter table t replica identity nothing;

ALTER TABLE

sender=# create publication p;

CREATE PUBLICATION

sender=# alter publication p add table t;

ALTER PUBLICATION

Q3: What happens if you create a FOR ALL TABLES publication?

And this is allowed:

sender=# create table t (a text);

CREATE TABLE

sender=# alter table t replica identity nothing;

ALTER TABLE

sender=# create publication p for all tables;

CREATE PUBLICATION

Q4: What happens if you set a table to NOTHING when it already belongs to a publication?

It’s allowed:

sender=# create table t (a text);

CREATE TABLE

sender=# create publication p for table t;

CREATE PUBLICATION

sender=# alter table t replica identity nothing;

ALTER TABLE

Q5: What happens if you set a table to NOTHING when you already have a FOR ALL TABLES publication?

It’s allowed:

sender=# create table t (a text);

CREATE TABLE

sender=# create publication p for all tables;

CREATE PUBLICATION

sender=# alter table t replica identity nothing;

ALTER TABLE

Q6: What happens if you change an insert-only publication to an update publication, and it has a NOTHING table?

It’s allowed:

sender=# create table t (a text);

CREATE TABLE

sender=# alter table t replica identity nothing;

ALTER TABLE

sender=# create publication p for table t with (publish = insert);

CREATE PUBLICATION

sender=# alter publication p set (publish = 'insert,update,delete,truncate');

ALTER PUBLICATION

Q7: What happens if you change an insert-only FOR ALL TABLES publication to an update publication, and the database has a NOTHING table?

It’s allowed:

sender=# create table t (a text);

CREATE TABLE

sender=# alter table t replica identity nothing;

ALTER TABLE

sender=# create publication p for all tables with (publish = insert);

CREATE PUBLICATION

sender=# alter publication p set (publish = 'insert,update,delete,truncate');

ALTER PUBLICATION

Q8: What happens if you update a NOTHING table that is in a publication?

It’s not allowed!

This fails on the publisher side, even if there is no subscription:

sender=# create table t (a text);

CREATE TABLE

sender=# alter table t replica identity nothing;

ALTER TABLE

sender=# create publication p for table t;

CREATE PUBLICATION

sender=# insert into t values ('a');

INSERT 0 1

sender=# update t set a = 'b';

ERROR: cannot update table "t" because it does not have a replica identity and publishes updates

HINT: To enable updating the table, set REPLICA IDENTITY using ALTER TABLE.

Q9: What happens if you delete from a NOTHING table that is in a publication?

This fails the same way:

sender=# create table t (a text);

CREATE TABLE

sender=# alter table t replica identity nothing;

ALTER TABLE

sender=# create publication p for table t;

CREATE PUBLICATION

sender=# insert into t values ('a');

INSERT 0 1

sender=# delete from t;

ERROR: cannot delete from table "t" because it does not have a replica identity and publishes deletes

HINT: To enable deleting from the table, set REPLICA IDENTITY using ALTER TABLE.

So to summarize, Postgres doesn’t validate publications up front. It only complains when you try to update or delete rows in a NOTHING table whose publication publishes those changes.

FULL

FULL means all columns are combined to identify the old row. Of course that might still not be unique. So what happens if two rows are identical and you change one of them but not the other?

Q1: What happens if you have two identical records and you delete one?

On the publisher:

sender=# create table t (a text);

CREATE TABLE

sender=# alter table t replica identity full;

ALTER TABLE

sender=# create publication p for table t;

CREATE PUBLICATION

On the subscriber:

receiver=# create table t (a text);

CREATE TABLE

receiver=# create subscription s connection 'dbname=sender' publication p;

NOTICE: created replication slot "s" on publisher

CREATE SUBSCRIPTION

Back on the publisher:

sender=# insert into t values ('a');

INSERT 0 1

sender=# insert into t values ('a');

INSERT 0 1

sender=# select ctid, a from t;

ctid | a

-------+---

(0,1) | a

(0,2) | a

(2 rows)

sender=# delete from t where ctid = '(0,1)';

DELETE 1

And the receiver sees:

receiver=# select * from t;

a

---

a

(1 row)

So we didn’t lose both rows! I guess that’s because we are replicating a delete of one row. Which one we delete doesn’t matter, but we’ll only delete one.

Q2: What happens if you have two identical records and you delete both?

On the publisher:

sender=# create table t (a text);

CREATE TABLE

sender=# alter table t replica identity full;

ALTER TABLE

sender=# create publication p for table t;

CREATE PUBLICATION

On the subscriber:

receiver=# create table t (a text);

CREATE TABLE

receiver=# create subscription s connection 'dbname=sender' publication p;

NOTICE: created replication slot "s" on publisher

CREATE SUBSCRIPTION

Back on the publisher:

sender=# insert into t values ('a');

INSERT 0 1

sender=# insert into t values ('a');

INSERT 0 1

sender=# delete from t;

DELETE 2

And the receiver sees:

receiver=# select * from t;

a

---

(0 rows)

So both rows disappeared. That makes sense, because the receiver got two messages to delete a row like ('a').

Q3: What happens if you have two identical records and you update one?

I assume updating one of two identical rows will work the same, but let’s check:

sender=# create table t (a text);

CREATE TABLE

sender=# alter table t replica identity full;

ALTER TABLE

sender=# create publication p for table t;

CREATE PUBLICATION

receiver=# create table t (a text);

CREATE TABLE

receiver=# create subscription s connection 'dbname=sender' publication p;

NOTICE: created replication slot "s" on publisher

CREATE SUBSCRIPTION

sender=# insert into t values ('a');

INSERT 0 1

sender=# insert into t values ('a');

INSERT 0 1

sender=# select ctid, a from t;

ctid | a

-------+---

(0,1) | a

(0,2) | a

(2 rows)

sender=# update t set a = 'b' where ctid = '(0,1)';

UPDATE 1

receiver=# select * from t;

a

---

a

b

(2 rows)

Yep!

So with FULL there are no errors on either the publisher side or the subscriber side. The disadvantage is that everything is slower: logical decoding sends more data, and the subscriber must compare all the columns for equality.
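The behavior seen in these three tests fits a simple model: for each replicated change, the subscriber finds one row equal to the old row and changes only that one. Here is a Python sketch of that model. It imitates the observed results, not the actual apply code in Postgres, and the function names are invented.

```python
def apply_delete(rows, old_row):
    """Remove the first row equal to old_row; report whether one was found."""
    for index, row in enumerate(rows):
        if row == old_row:
            del rows[index]
            return True
    return False


def apply_update(rows, old_row, new_row):
    """Replace the first row equal to old_row with new_row."""
    for index, row in enumerate(rows):
        if row == old_row:
            rows[index] = new_row
            return True
    return False


receiver = [("a",), ("a",)]
apply_update(receiver, ("a",), ("b",))   # one message, one row changed
print(sorted(receiver))

receiver = [("a",), ("a",)]
apply_delete(receiver, ("a",))           # "delete from t" sends two messages,
apply_delete(receiver, ("a",))           # one per deleted row
print(receiver)
```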

I wonder if the subscriber will still use an index to apply changes if there is one? I haven’t tested that yet, but if I do I will put an update here.

USING INDEX <index>

This lets us choose a UNIQUE index, as long as none of its keys are nullable. Assuming the subscriber has the same uniqueness, it can quickly locate which rows to change. Here is the happy path:

sender=# create table t (a text not null unique);

CREATE TABLE

sender=# alter table t replica identity using index t_a_key;

ALTER TABLE

sender=# create publication p for table t;

CREATE PUBLICATION

In that case, pg_class.relreplident is set to i, and pg_index.indisreplident is set to true for that index. You can see which index is used with \d:

sender=# \d t

Table "public.t"

Column | Type | Collation | Nullable | Default

--------+------+-----------+----------+---------

a | text | | not null |

Indexes:

"t_a_key" UNIQUE CONSTRAINT, btree (a) REPLICA IDENTITY

But what can we do to mess it up?

Q1: What happens if you use a not-unique index?

sender=# create table t (a text);

CREATE TABLE

sender=# create index idx_t_a on t (a);

CREATE INDEX

sender=# alter table t replica identity using index idx_t_a;

ERROR: cannot use non-unique index "idx_t_a" as replica identity

We can’t even set the REPLICA IDENTITY.

Q2: What happens if you drop the index?

sender=# create table t (a text not null);

CREATE TABLE

sender=# create unique index idx_t_a on t (a);

CREATE INDEX

sender=# alter table t replica identity using index idx_t_a;

ALTER TABLE

sender=# drop index idx_t_a;

DROP INDEX

sender=# create publication p for table t;

CREATE PUBLICATION

Well you were able to drop it! The table’s relreplident is still i, but now there is no index with a true pg_index.indisreplident.
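One way to confirm that state is to query the catalogs directly. This is a minimal sketch, assuming the table t from the example above; the alias replica_identity_index is my own label, not a catalog column.

```sql
-- relreplident values: d = default, n = nothing, f = full, i = index.
-- The left join keeps the table's row even when no index is flagged,
-- so a missing replica identity index shows up as NULL.
select c.relname,
       c.relreplident,
       i.indexrelid::regclass as replica_identity_index
from pg_class c
left join pg_index i
  on i.indrelid = c.oid
 and i.indisreplident
where c.oid = 't'::regclass;
```

After the drop index above, relreplident should still be i while replica_identity_index comes back NULL.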

Let’s see what happens if we try to use it. On the subscriber:

receiver=# create table t (a text);

CREATE TABLE

receiver=# create subscription s connection 'dbname=sender' publication p;

NOTICE: created replication slot "s" on publisher

CREATE SUBSCRIPTION

Back on the sender:

sender=# insert into t values ('a');

INSERT 0 1

sender=# update t set a = 'b';

ERROR: cannot update table "t" because it does not have a replica identity and publishes updates

Oops! We were able to insert, but the update failed. Even on the sender, we weren’t able to make the change.

I’m dreaming of some other trickery with ALTER INDEX, but nothing that seems problematic is supported, like changing a unique index to a non-unique one.

So like NOTHING, failures happen when we update the table. But in addition there is validation before setting pg_index.indisreplident. If the index is not appropriate, we’ll fail there.

DEFAULT

The DEFAULT setting means to use the table’s primary key, if it has one. So if the table has a primary key, you’re all good. But what if it doesn’t? Is it the same as NOTHING?

Well we are allowed to set things up:

sender=# create table t (a text);

CREATE TABLE

sender=# create publication p for table t;

CREATE PUBLICATION

And on the receiver:

receiver=# create table t (a text);

CREATE TABLE

receiver=# create subscription s connection 'dbname=sender' publication p;

NOTICE: created replication slot "s" on publisher

CREATE SUBSCRIPTION

Now we make some changes:

sender=# insert into t values ('a');

INSERT 0 1

sender=# update t set a = 'b';

ERROR: cannot update table "t" because it does not have a replica identity and publishes updates

So again we can insert things, but not send updates. We fail before we even send over the change. In this test we didn’t even need to run anything on the receiver to cause the failure.

Since the failure is so late, there is no difference between starting with a primary key and dropping it partway through. Likewise with other ideas we tried for NOTHING, like adding the publication before the table or using FOR ALL TABLES. Also changing an insert-only publication to an update publication is permitted, but then the next update command fails.

Still let’s just try one simple case:

Q2: What happens if you drop the primary key from a table in a publication?

sender=# create table t (a text primary key);

CREATE TABLE

sender=# create publication p for table t;

CREATE PUBLICATION

sender=# alter table t drop constraint t_pkey;

ALTER TABLE

sender=# insert into t values ('a');

INSERT 0 1

sender=# update t set a = 'b';

ERROR: cannot update table "t" because it does not have a replica identity and publishes updates

HINT: To enable updating the table, set REPLICA IDENTITY using ALTER TABLE.

No problem dropping the key, but then we get a failure making an update. Also this test shows that no subscription is required.
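The HINT points at the way out. As a sketch, assuming the same table t and that the extra cost of FULL is acceptable, giving the table some replica identity should let the update through again:

```sql
-- FULL needs no key or index, so it works even after the primary key is gone.
alter table t replica identity full;

-- The update that failed above should now be accepted.
update t set a = 'b';

-- Alternatively, restore the primary key so that DEFAULT has something to use:
-- alter table t add primary key (a);
-- alter table t replica identity default;
```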

Conclusion

So in all four cases, the validation happens when you update/delete a row and try to push the change through a PUBLICATION. Your change gets rolled back, so there is no inconsistency. I’m happy about this, because it lowers the risk of tricking Postgres into publishing changes it shouldn’t. Also it makes things easier for replicating temporal tables. (Of course that was my ulterior motive!)

Postgres Logical Replication

Logical decoding is different from logical replication. Logical replication is built on logical decoding.

Logical Decoding

Logical decoding exports the changes happening in your database. They are streamed through a replication slot. The slot keeps track of how far you’ve read, so that it doesn’t skip or repeat messages. (Btw how is it not “at least once” delivery?) Basically you are getting a stream of WAL records. But whereas a physical replication slot gives you the exact binary of the WAL as it gets written, a logical replication slot includes an output plugin that encodes the WAL in a (hopefully) more accessible way.

You can write output plugins yourself by implementing various callbacks to handle different kinds of database activity. The only essential callbacks are LogicalDecodeBeginCB, LogicalDecodeChangeCB, and LogicalDecodeCommitCB, but there are many optional ones. Some of those let you implement two-phase commit (see e.g. Kleppmann 352–359).

You can also add an output writer, but I’m not sure how it differs from a plugin. Somehow it only requires implementing three callbacks instead of many. I don’t see any way to “install” your writer or attach it to a slot. I’ll come back to this some other day.

The most popular output plugin is wal2json. When you read from the replication slot, you get each WAL record as a JSON object. Postgres also has a built-in test_decoding output plugin (the default), which gives you the details as plain text.

Postgres comes with a tool called pg_recvlogical you can use to read from a replication slot. It can also create and drop slots. For example:

pg_recvlogical --create-slot --if-not-exists --slot s --dbname paul

pg_recvlogical --start --slot s --dbname paul -f -

If you let that run then go into psql and issue some inserts/updates/deletes, you will see the messages getting sent through the slot.

paul=# create table t (a text primary key);

CREATE TABLE

paul=# insert into t values ('a'), ('b');

INSERT 0 2

paul=# update t set a = 'aa' where a = 'a';

UPDATE 1

paul=# delete from t where a = 'b';

DELETE 1

Our pg_recvlogical command prints:

BEGIN 19715

COMMIT 19715

BEGIN 19716

table public.t: INSERT: a[text]:'a'

table public.t: INSERT: a[text]:'b'

COMMIT 19716

BEGIN 19717

table public.t: UPDATE: old-key: a[text]:'a' new-tuple: a[text]:'aa'

COMMIT 19717

BEGIN 19718

table public.t: DELETE: a[text]:'b'

COMMIT 19718

If you want JSON instead, you can create the slot with -P wal2json (after installing it).
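You don’t need pg_recvlogical to experiment: the same changes can be read with SQL functions. This is a sketch assuming a fresh slot named s_sql (a made-up name), the built-in test_decoding plugin, the table t from above, and wal_level set to logical.

```sql
-- Create a logical slot that uses the test_decoding output plugin.
select * from pg_create_logical_replication_slot('s_sql', 'test_decoding');

-- Make a change, then look at it without consuming it.
insert into t values ('c');

select lsn, xid, data
from pg_logical_slot_peek_changes('s_sql', null, null);

-- Read the changes and advance the slot, so they are not returned again.
select lsn, xid, data
from pg_logical_slot_get_changes('s_sql', null, null);

-- Drop the slot when done; an abandoned slot makes the server retain WAL.
select pg_drop_replication_slot('s_sql');
```

The two null arguments are upto_lsn and upto_nchanges, meaning "no limit".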

The tricky part here is identifying which row changed for updates and deletes. Because t had a primary key, Postgres used it, because it should uniquely identify a row on the other end. But you can do other things too, based on the table’s REPLICA IDENTITY. You can set this to DEFAULT (i.e. using the primary key if present), NOTHING, USING INDEX <index>, or FULL.

NOTHING is the same as DEFAULT with no primary key: there is no identifying information available.

USING INDEX takes a unique index with no nullable parts, and uses that.

FULL uses all the attributes of the row. It works for any table, but it’s less performant.

For more details on REPLICA IDENTITY you can read my article here.
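For reference, each setting is chosen with ALTER TABLE. A sketch, assuming a table t that has a unique index named t_a_key on a NOT NULL column (as you would get from create table t (a text not null unique)):

```sql
alter table t replica identity default;              -- use the primary key, if any
alter table t replica identity nothing;              -- record no old-row information
alter table t replica identity using index t_a_key;  -- use a unique, non-null index
alter table t replica identity full;                 -- record the whole old row

-- Check the current setting: d, n, i, or f respectively.
select relreplident from pg_class where oid = 't'::regclass;
```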

Let’s drop the table, create it without a primary key, and run the same commands again:

paul=# create table t (a text);

CREATE TABLE

paul=# insert into t values ('a'), ('b');

INSERT 0 2

paul=# update t set a = 'aa' where a = 'a';

UPDATE 1

paul=# delete from t where a = 'b';

DELETE 1

Now the logical decoding output doesn’t have any old-row identifiers:

BEGIN 19720

COMMIT 19720

BEGIN 19721

table public.t: INSERT: a[text]:'a'

table public.t: INSERT: a[text]:'b'

COMMIT 19721

BEGIN 19722

table public.t: UPDATE: a[text]:'aa'

COMMIT 19722

BEGIN 19723

table public.t: DELETE: (no-tuple-data)

COMMIT 19723

Missing a REPLICA IDENTITY is no problem for logical decoding. For logical replication, it will raise an error when you try to update or delete.

Clarification of “Streaming”

In the Postgres docs, the word “streaming” can be misleading. Often it means physical replication (originally the only kind of streaming replication we had), in contrast to logical—but not always.

Sometimes “streaming replication” contrasts with “log shipping”. It means the standby opens a connection to the primary and constantly pulls data via the streaming replication protocol. In log shipping, the primary has an archive_command to copy WAL files where the standby can read them. (In fact you usually combine these methods.) That said, this page is at the same time equating streaming replication with physical. You could set up a standby with logical replication, but that’s not what it’s describing.

In another place, “streaming” means the streaming replication protocol in contrast to SQL commands.

Perhaps most often, “streaming replication” means “physical replication” in contrast to logical. For instance this note about logical replication slots should perhaps s/streaming/physical/:

PostgreSQL also has streaming replication slots (see Section 26.2.5), but they are used somewhat differently there.

Likewise the three opening paragraphs of the page for “Replication” configuration seem to contrast streaming replication to logical replication.

So just watch out when you’re reading! More precise language would contrast “physical” with “logical”, and be clear that both are “streaming.” I’m hopeful from that mailing list thread so far that we might start moving the docs in that direction.

Logical Replication

Logical replication is built on top of logical decoding and replication slots. But instead of working at such a low level, it replicates changes from one Postgres table to another (usually in another cluster). The publisher side uses a built-in output plugin named pgoutput. To set it up, you use CREATE PUBLICATION on the sender and CREATE SUBSCRIPTION on the receiver. Like this:

sender=# CREATE PUBLICATION p FOR TABLE t1, t2;

. . .

receiver=# CREATE SUBSCRIPTION s CONNECTION 'host=yonder dbname=thisnthat' PUBLICATION p;

But first you’ll need to have tables in the receiving database named t1 and t2, with at least enough columns to match their sources.

There are lots of options. For the publication, you can create it FOR ALL TABLES or FOR TABLES IN SCHEMA s1, s2. You can publish a subset of event types (insert, update, delete, truncate). You can publish a subset of each table’s columns. You can do special things with partitions. You can have a WHERE clause to publish only certain rows (assuming the publication is for a single table).

The subscription side has lots of options too. See the docs above for details.
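To make those publication options concrete, here are some sketches. The publication names, the schemas s1 and s2, and the columns id, name, and active are all invented for illustration; column lists and row filters need Postgres 15 or later.

```sql
-- Everything in the database, including tables created later.
create publication p_all for all tables;

-- Every table in the listed schemas.
create publication p_schemas for tables in schema s1, s2;

-- Only some event types.
create publication p_inserts for table t1 with (publish = 'insert');

-- Only some columns of a table. If updates or deletes are published,
-- the list has to include the replica identity columns.
create publication p_cols for table t1 (id, name);

-- Only some rows. This one publishes inserts only, because for updates
-- and deletes the filter may only use replica identity columns.
create publication p_rows for table t1 where (active)
  with (publish = 'insert');
```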

Once you have a publication and subscription created, you will see an entry in the sender’s pg_stat_replication:

sender=# select * from pg_stat_replication \gx

-[ RECORD 1 ]----+------------------------------

pid | 44344

usesysid | 10

usename | paul

application_name | s

client_addr | NULL

client_hostname | NULL

client_port | -1

backend_start | 2024-10-13 11:37:18.361684-05

backend_xmin | NULL

state | streaming

sent_lsn | 0/11DE0AB8

write_lsn | 0/11DE0AB8

flush_lsn | 0/11DE0AB8

replay_lsn | 0/11DE0AB8

write_lag | NULL

flush_lag | NULL

replay_lag | NULL

sync_priority | 0

sync_state | async

reply_time | 2024-10-13 11:37:48.419264-05

Unlike physical replication, logical replication does not transfer DDL changes.

Synchronous Replication

Both logical decoding and logical replication can be synchronous. This means we can configure the primary to report successful commits only after hearing from the standby(s) that they were successful. (You can also do this with physical replication.) To enable this, first set synchronous_standby_names to a comma-separated list of names you will wait for. Then set synchronous_commit to remote_apply, on, remote_write, or local. (The default is on.)

remote_apply is the most thorough: the standbys must flush the commit record to disk and apply it (so that other connections can see it). on also requires flushing to disk (e.g. WAL), but it needn’t be applied. remote_write requires standbys to perform the write to disk, but they need not have confirmation from the OS that it’s been flushed. And local will not wait for standbys. (This is sort of pointless if you put things in synchronous_standby_names, but maybe it lets you disable synchronous replication temporarily without erasing that other setting.)

The names to put in synchronous_standby_names should match the connection’s application_name, one of the parameters available when opening any connection. You can set it in the connection string if you like. Otherwise it defaults to the subscription name. (For physical replication, standbys omitting this from their connection string default to their cluster_name or failing that walreceiver.) You can see the application_name in pg_stat_replication above matches the subscription name.

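If you list more than one standby as a plain comma-separated list, the primary waits on confirmation from only one of them: the first name in the list that is currently connected. (That form is equivalent to FIRST 1 (...).) To wait on several standbys, or on any quorum of them, use the FIRST n (...) or ANY n (...) forms of synchronous_standby_names.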
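As a sketch of the settings involved, assuming a single subscription named s (so the connection’s application_name is s) and superuser access on the sender:

```sql
-- Wait for the subscriber whose application_name is 's'.
-- For several standbys you could instead write e.g.
-- 'FIRST 2 (s1, s2)' or 'ANY 1 (s1, s2)' (hypothetical names).
alter system set synchronous_standby_names = 's';

-- Commits return only after that subscriber has applied them.
alter system set synchronous_commit = 'remote_apply';

-- Both settings take effect on reload; no restart is needed.
select pg_reload_conf();

-- sync_state should now read 'sync' instead of 'async'.
select application_name, sync_state from pg_stat_replication;
```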

If you are using synchronous logical replication, this is an important warning:

A synchronous replica receiving changes via logical decoding will work in the scope of a single database. Since, in contrast to that, synchronous_standby_names currently is server wide, this means this technique will not work properly if more than one database is actively used.

According to commit 3cb828dbe2 the problem is a potential deadlock from locking catalog tables. The commit and the docs have the specific lock sequences that are dangerous, and they don’t seem hard to avoid.

My first impression on reading the note was a different scenario though. Consider: you have two databases, both replicated with logical replication, but each to a different standby. You make a change in one database, and now you’re waiting for both standbys to confirm. But one never got the change so will never answer.

But in fact Postgres is smarter than that and only waits for the standbys that are relevant. I tested these scenarios:

- One database in the sender cluster replicates to a database in the receiver cluster, and I update a table in a different database in the sender cluster. (I am “actively using” it.)

- Two databases in the sender cluster each replicate to the same database in the receiver cluster.

- Two databases in the sender cluster each replicate to databases in different clusters.

Logical Replication combined with Physical Replication Failover

If your primary cluster has both a physical replication standby and logical replication subscribers, and then you fail over to the standby, you need a way to point the logical subscribers at the newly-promoted cluster (i.e. the former standby). You want to do that without losing data.

If (before the failure) you set sync_replication_slots to true on the physical standby, it will maintain the same slots the primary has, including keeping track of how far each has been read. That way when your logical subscribers connect to the new primary, they can resume where they left off.

It is also a good idea to use synchronized_standby_slots, to make sure the logical subscribers don’t get ahead of the physical standby.
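Putting those settings together might look roughly like this on Postgres 17. It is a sketch under assumptions: the host name primary, the physical slot name standby1_slot, and the subscription and publication names are placeholders, and each block runs on a different server.

```sql
-- On the logical subscriber: mark the subscription's slot as a failover
-- slot, so that the standby is allowed to synchronize it.
create subscription s connection 'host=primary dbname=sender'
  publication p with (failover = true);

-- On the physical standby: turn on slot synchronization. It also needs
-- hot_standby_feedback, a primary_slot_name, and a dbname in primary_conninfo.
alter system set sync_replication_slots = on;
alter system set hot_standby_feedback = on;
select pg_reload_conf();

-- On the primary: hold logical subscribers back until the physical
-- standby using this slot has received the WAL.
alter system set synchronized_standby_slots = 'standby1_slot';
select pg_reload_conf();

-- On the standby: check which slots have been synchronized.
select slot_name, failover, synced from pg_replication_slots;
```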

Benchmarking Temporal Foreign Keys

Way back in February Peter Eisentraut asked me if I’d tested the performance of my patch to add temporal foreign keys to Postgres.

Have you checked that the generated queries can use indexes and have suitable performance? Do you have example execution plans maybe?

Here is a report on the tests I made. I gave a talk about this last month at pdxpug, but this blog post will be easier to access, and I’ll focus just on the foreign key results.

Method

As far as I know there are no published benchmark schemas or workflows for temporal data. Since the tables require start/end columns, you can’t use an existing benchmark like TPC-H. The tables built in to pgbench are no use either. I’m not even sure where to find a public dataset. The closest is something called “Incumben”, mentioned in the “Temporal Alignment” paper. The authors say it has 85,857 entries for job assignments across 49,195 employees at the University of Arizona, but I can’t find any trace of it online. (I’ll update here if I hear back from them about it.)

So I built a temporal benchmark of my own using CMU’s Benchbase framework. (Thanks to Mark Wong and Grant Holly for that recommendation!) It also uses employees and positions, both temporal tables with a valid_at column (a daterange). Each position has a reference to an employee, checked by a temporal foreign key. Primary and foreign keys have GiST indexes combining the integer part and the range part. Here is the DDL:

CREATE TABLE employees (

id int GENERATED BY DEFAULT AS IDENTITY NOT NULL,

valid_at daterange NOT NULL,

name text NOT NULL,

salary int NOT NULL,

PRIMARY KEY (id, valid_at WITHOUT OVERLAPS)

);

CREATE TABLE positions (

id int GENERATED BY DEFAULT AS IDENTITY NOT NULL,

valid_at daterange NOT NULL,

name text NOT NULL,

employee_id int NOT NULL,

PRIMARY KEY (id, valid_at WITHOUT OVERLAPS),

FOREIGN KEY (employee_id, PERIOD valid_at) REFERENCES employees (id, PERIOD valid_at)

);

CREATE INDEX idx_positions_employee_id ON positions USING gist (employee_id, valid_at);

Naturally you can’t run that unless you’ve compiled Postgres with the temporal patches above.

The benchmark has procedures that exercise foreign keys (update/delete employee, insert/update position). There are other procedures too: selecting one row, selecting many rows, inner join, outer join, semijoin, antijoin. I plan to add aggregates and set operations (union/except/intersect), as well as better queries for sequenced vs non-sequenced semantics. But right now the foreign key procedures are better developed than anything else. I also plan to change the SQL from rangetypes to standard SQL:2011 PERIODs, at least for non-Postgres RDBMSes. I’ll write more about all that later; this post is about foreign keys.

range_agg Implementation

Temporal foreign keys in Postgres are implemented like this:

SELECT 1

FROM (

SELECT pkperiodatt AS r

FROM [ONLY] pktable x

WHERE pkatt1 = $1 [AND ...]

AND pkperiodatt && $n

FOR KEY SHARE OF x

) x1

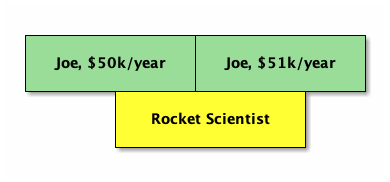

HAVING $n <@ range_agg(x1.r)This is very similar to non-temporal checks. The main difference is we use range_agg to aggregate referenced records, since it may require their combination to satisfy the reference. For example if the employee got a raise in the middle of the position, neither employee record alone covers the position’s valid time:

In our query, the HAVING checks that the “sum” of the employee times covers the position time.
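Here is a small standalone illustration of that check, using literal ranges instead of tables (the dates are made up). It needs Postgres 14 or later for range_agg and multiranges.

```sql
-- Two adjacent employee records; together they cover the position.
select daterange('2020-03-01', '2020-09-01') <@ range_agg(r) as covered
from (values
  (daterange('2020-01-01', '2020-06-01')),
  (daterange('2020-06-01', '2021-01-01'))
) as employee(r);
-- covered = true

-- Leave a one-month gap and the same position is no longer covered.
select daterange('2020-03-01', '2020-09-01') <@ range_agg(r) as covered
from (values
  (daterange('2020-01-01', '2020-05-01')),
  (daterange('2020-06-01', '2021-01-01'))
) as employee(r);
-- covered = false
```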

A subquery is not logically required, but Postgres currently doesn’t allow FOR KEY SHARE in a query with aggregations.

I like this query because it works not just for rangetypes, but multiranges too. In fact we could easily support arbitrary types, as long as the user provides an opclass with an appropriate support function (similar to the stratnum support function introduced for temporal primary keys). We would call that function in place of range_agg. But how does it perform?

EXISTS implementation

I compared this query with two others. The original implementation for temporal foreign keys appears on pages 128–129 of Developing Time-Oriented Database Applications in SQL by Richard Snodgrass. I call this the “EXISTS implementation”. Here is the SQL I used:

SELECT 1

-- There was a PK when the FK started:

WHERE EXISTS (

SELECT 1

FROM [ONLY] <pktable>

WHERE pkatt1 = $1 [AND ...]

AND COALESCE(lower(pkperiodatt), '-Infinity')

<= COALESCE(lower($n), '-Infinity')

AND COALESCE(lower($n), '-Infinity')

< COALESCE(upper(pkperiodatt), 'Infinity')

)

-- There was a PK when the FK ended:

AND EXISTS (

SELECT 1

FROM [ONLY] <pktable>

WHERE pkatt1 = $1 [AND ...]

AND COALESCE(lower(pkperiodatt), '-Infinity')

< COALESCE(upper($n), 'Infinity')

AND COALESCE(upper($n), 'Infinity')

<= COALESCE(upper(pkperiodatt), 'Infinity')

)

-- There are no gaps in the PK:

-- (i.e. there is no PK that ends early,

-- unless a matching PK record starts right away)

AND NOT EXISTS (

SELECT 1

FROM [ONLY] <pktable> AS pk1

WHERE pkatt1 = $1 [AND ...]

AND COALESCE(lower($n), '-Infinity')

< COALESCE(upper(pkperiodatt), 'Infinity')

AND COALESCE(upper(pkperiodatt), 'Infinity')

< COALESCE(upper($n), 'Infinity')

AND NOT EXISTS (

SELECT 1

FROM [ONLY] <pktable> AS pk2

WHERE pk1.pkatt1 = pk2.pkatt1 [AND ...]

-- but skip pk1.pkperiodatt && pk2.pkperiodatt

AND COALESCE(lower(pk2.pkperiodatt), '-Infinity')

<= COALESCE(upper(pk1.pkperiodatt), 'Infinity')

AND COALESCE(upper(pk1.pkperiodatt), 'Infinity')

< COALESCE(upper(pk2.pkperiodatt), 'Infinity')

)

);

The main idea here is that we check three things: (1) the referencing row is covered in the beginning, (2) it is covered in the end, (3) in between, the referenced row(s) have no gaps.

I made a few changes to the original:

- It can’t be a CHECK constraint, since it references other rows.

- There is less nesting. The original is wrapped in a big NOT EXISTS and looks for bad rows. Essentially it says “there are no invalid records.” In Postgres we check one referencing row at a time, and we give a result if it is valid. You could say we look for good rows. This also requires inverting the middle-layer EXISTS and NOT EXISTS predicates, and changing ORs to ANDs. I’ve often run into trouble with OR, so this is probably fortunate.

- We have to “unwrap” the start/end times since they are stored in a rangetype. I could have used rangetype operators here, but I wanted to keep the adaptation as straightforward as possible, and the previous changes felt like a lot already. Unwrapping requires dealing with unbounded ranges, so I’m using plus/minus Infinity as a sentinel. This is not perfectly accurate, since in ranges a null bound is “further out” than a plus/minus Infinity. (Try select '{(,)}'::datemultirange - '{(-Infinity,Infinity)}'::datemultirange.) But again, solving that was taking me too far from the original, and it’s fine for a benchmark.

- We need to lock the rows with FOR KEY SHARE in the same way as above. We need to do this in each branch, since they may use different rows.
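The Infinity-vs-null point in the list above can be seen with a couple of range functions. This is a small sketch with literal ranges; the column aliases are my own.

```sql
-- An unbounded range has a null lower bound, which is not the same
-- thing as a bound whose value is -infinity.
select lower('(,)'::daterange) is null        as null_bound,
       lower_inf('(,)'::daterange)            as unbounded,
       lower_inf('[-infinity,)'::daterange)   as infinite_value_is_unbounded;
-- true, true, false

-- The sentinel used in the query above maps the null bound onto -infinity,
-- which is how the two cases end up being treated alike.
select coalesce(lower('(,)'::daterange), '-infinity') as sentinel;
```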

Given the complexity, I didn’t expect this query to perform very well.

lag implementation

Finally there is an implementation in Vik Fearing’s periods extension. This is a lot like the EXISTS implementation, except to check for gaps it uses the lag window function. Here is the SQL I tested:

SELECT 1

FROM (

SELECT uk.uk_start_value,

uk.uk_end_value,

NULLIF(LAG(uk.uk_end_value) OVER

(ORDER BY uk.uk_start_value), uk.uk_start_value) AS x

FROM (

SELECT coalesce(lower(x.pkperiodatt), '-Infinity') AS uk_start_value,

coalesce(upper(x.pkperiodatt), 'Infinity') AS uk_end_value

FROM pktable AS x

WHERE pkatt1 = $1 [AND ...]

AND x.pkperiodatt && $n

FOR KEY SHARE OF x

) AS uk

) AS uk

WHERE uk.uk_start_value < upper($n)

AND uk.uk_end_value >= lower($n)

HAVING MIN(uk.uk_start_value) <= lower($n)

AND MAX(uk.uk_end_value) >= upper($n)

AND array_agg(uk.x) FILTER (WHERE uk.x IS NOT NULL) IS NULL

Again I had to make some adaptations to the original:

- There is less nesting, for similar reasons as before.

- We unwrap the ranges, much like the EXISTS version. Again there is an Infinity-vs-null discrepancy, but it is harder to deal with since the query uses null entries in the lag result to indicate gaps.

- I couldn’t resist using && instead of <= and >= in the most-nested part to find relevant rows. The change was sufficiently obvious, and if it makes a difference it should speed things up, so it makes the comparison a bit more fair.
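To see how the lag trick flags a gap, here it is on three literal ranges (made-up dates). The NULLIF/LAG expression is the same as in the query above; only the input is simplified.

```sql
select lower(r) as uk_start_value,
       upper(r) as uk_end_value,
       -- NULL when this row starts exactly where the previous one ended
       -- (or when there is no previous row); otherwise the previous end.
       nullif(lag(upper(r)) over (order by lower(r)), lower(r)) as x
from (values
  (daterange('2020-01-01', '2020-06-01')),
  (daterange('2020-06-01', '2020-09-01')),
  (daterange('2020-10-01', '2021-01-01'))   -- starts a month late
) as uk(r)
order by uk_start_value;
-- x is NULL for the first two rows and 2020-09-01 for the third.
```

Any non-null x means a gap, which is why the outer query requires the filtered array_agg to be NULL.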

I made a new branch rooted in my valid-time branch, and added an extra commit to switch between each implementation with a compile-time flag. By default we still use range_agg, but instead you can say -DRI_TEMPORAL_IMPL_LAG or -DRI_TEMPORAL_IMPL_EXISTS. I installed each implementation in a separate cluster, listening on ports 5460, 5461, and 5462 respectively.

I also included procedures in Benchbase to simply run the above queries as SELECTs. Since we are doing quite focused microbenchmarking here, I thought that would be less noisy than doing the same DML for each implementation. It also means we can run a mix of all three implementations together: they use the same cluster, and if there is any noise on the machine it affects them all. If you look at my temporal benchmark code, you’ll see the same SQL but adapted for the employees/positions tables.

Hypothesis

Here is the query plan for the range_agg implementation:

Aggregate

Filter: ('[2020-10-10,2020-12-12)'::daterange <@ range_agg(x1.r))

-> Subquery Scan on x1

-> LockRows

-> Index Scan using employees_pkey on employees x

Index Cond: ((id = 500) AND (valid_at && '[2020-10-10,2020-12-12)'::daterange))

It uses the index, and it all seems like what we’d want. It is not an Index Only Scan, but that’s because we lock the rows. Non-temporal foreign keys are the same way. This should perform pretty well.
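To get a plan like this yourself, the check can be run by hand. A sketch, assuming the employees table from the DDL above, with the same id and range literals that appear in the plan:

```sql
explain (costs off)
select 1
from (
  select valid_at as r
  from employees x
  where id = 500
    and valid_at && '[2020-10-10,2020-12-12)'::daterange
  for key share of x
) x1
having '[2020-10-10,2020-12-12)'::daterange <@ range_agg(x1.r);
```

Swapping explain (costs off) for explain (analyze, costs off) adds actual timings and row counts per node.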

Here is the query plan for the EXISTS implementation:

Result

One-Time Filter: ((InitPlan 1).col1 AND (InitPlan 2).col1 AND (NOT (InitPlan 4).col1))

InitPlan 1

-> LockRows

-> Index Scan using employees_pkey on employees x

Index Cond: ((id = 500) AND (valid_at && '[2020-10-10,2020-12-12)'::daterange))

Filter: ((COALESCE(lower(valid_at), '-infinity'::date) <= '2020-10-10'::date) AND ('2020-10-10'::date < COALESCE(upper(valid_at), 'infinity'::date)))

InitPlan 2

-> LockRows

-> Index Scan using employees_pkey on employees x_1

Index Cond: ((id = 500) AND (valid_at && '[2020-10-10,2020-12-12)'::daterange))

Filter: ((COALESCE(lower(valid_at), '-infinity'::date) < '2020-12-12'::date) AND ('2020-12-12'::date <= COALESCE(upper(valid_at), 'infinity'::date)))

InitPlan 4

-> LockRows

-> Index Scan using employees_pkey on employees pk1

Index Cond: ((id = 500) AND (valid_at && '[2020-10-10,2020-12-12)'::daterange))

Filter: (('2020-10-10'::date < COALESCE(upper(valid_at), 'infinity'::date)) AND (COALESCE(upper(valid_at), 'infinity'::date) < '2020-12-12'::date) AND (NOT EXISTS(SubPlan 3)))

SubPlan 3

-> LockRows

-> Index Scan using employees_pkey on employees pk2

Index Cond: (id = pk1.id)

Filter: ((COALESCE(lower(valid_at), '-infinity'::date) <= COALESCE(upper(pk1.valid_at), 'infinity'::date)) AND (COALESCE(upper(pk1.valid_at), 'infinity'::date) < COALESCE(upper(valid_at), 'infinity'::date)))

That looks like a lot of work!

And here is the plan for the lag implementation:

Aggregate

Filter: ((array_agg(uk.x) FILTER (WHERE (uk.x IS NOT NULL)) IS NULL) AND (min(uk.uk_start_value) <= '2020-10-10'::date) AND (max(uk.uk_end_value) >= '2020-12-12'::date))

-> Subquery Scan on uk

Filter: ((uk.uk_start_value < '2020-12-12'::date) AND (uk.uk_end_value >= '2020-10-10'::date))

-> WindowAgg

-> Sort

Sort Key: uk_1.uk_start_value

-> Subquery Scan on uk_1

-> LockRows

-> Index Scan using employees_pkey on employees x

Index Cond: ((id = 500) AND (valid_at && '[2020-10-10,2020-12-12)'::daterange))

This looks a lot like the range_agg version. We still use our index. There is an extra Sort step, but internally the range_agg function must do much the same thing (if not something worse). Maybe the biggest difference (though a slight one) is aggregating twice.

So I expect range_agg to perform the best, with lag a close second, and EXISTS far behind.

One exception may be a single referencing row that spans many referenced rows. If range_agg is O(n²), it should fall behind as the referenced rows increase.

Results

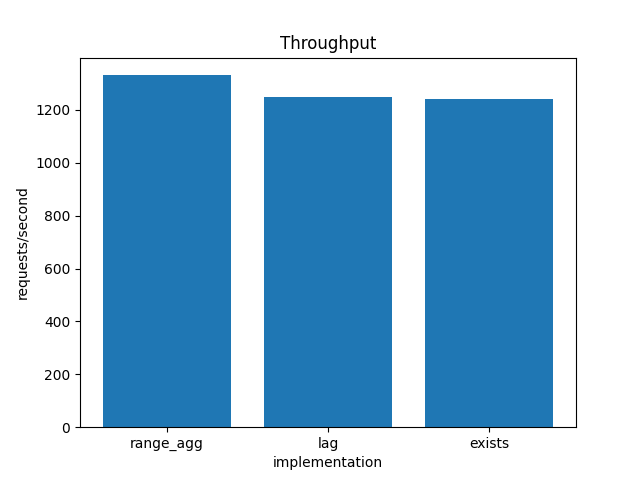

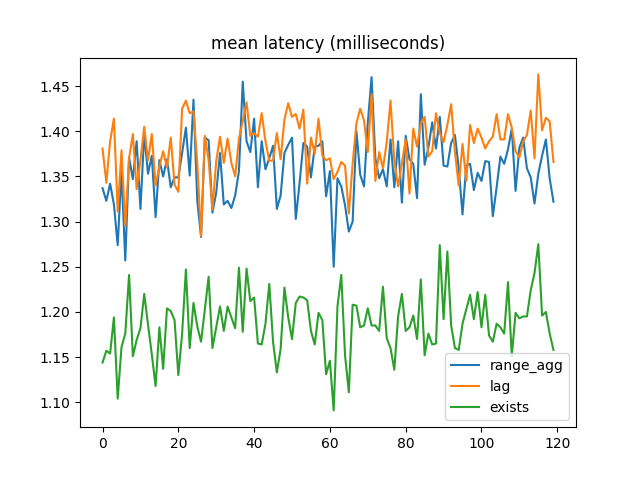

I started by running a quick test on my laptop, an M2 Macbook Air with 16 GB of RAM. I tested the DML commands on each cluster, one after another. Then I checked the benchbase summary file for the throughput. The results were what I expected:

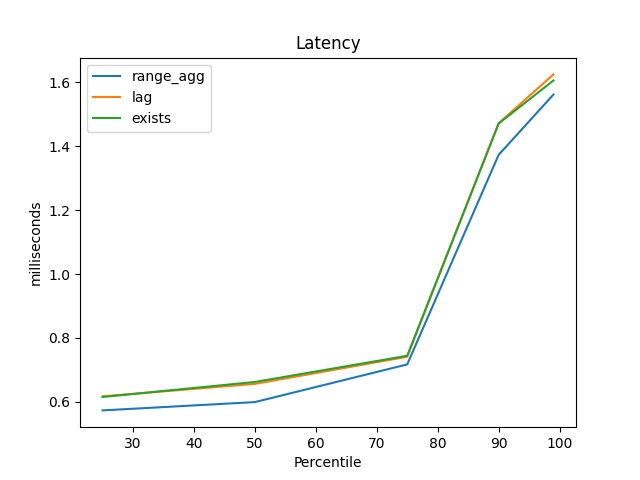

Similarly, range_agg had the best latency at the 25th, 50th, 75th, 90th, and 99th percentiles:

But the difference throughout is pretty small, and at the time my Benchbase procedures used a lot of synchronized blocks to ensure there were few foreign key failures, and that kind of locking seemed like it might throw off the results. I needed to do more than this casual check.

I ran all the subsequent benchmarks on my personal desktop, running Ubuntu 22.04.

It was hard to make things reproducible, but I wrote various scripts as I went, and I tried to capture results. The repo for all that is here. My pdxpug talk above contains some reflections about improving my benchmark methodology.

I also removed the synchronized blocks and dealt with foreign key failures a better way (by categorizing them as errors but not raising an exception).

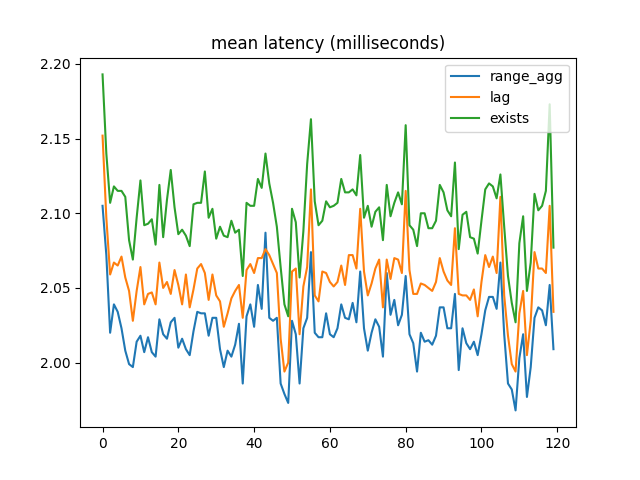

The first more careful tests used the direct SELECT statements.

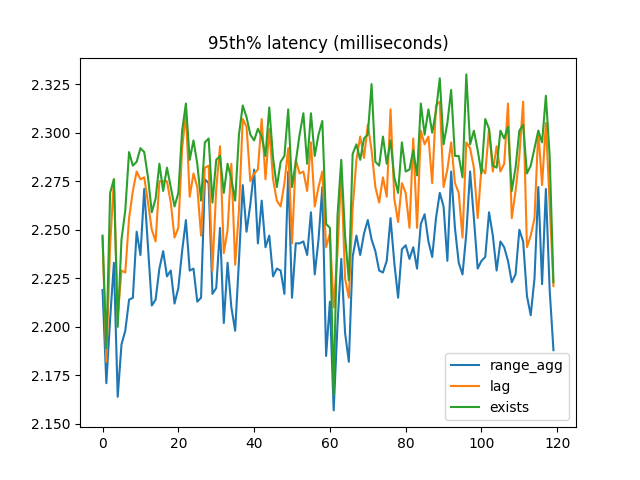

Again, the 95th percentile latency was what I expected:

But the winner for mean latency was EXISTS!:

A clue was in the Benchbase output showing successful transactions vs errors. (The Noop procedure is there so that I can make the proportions 33/33/33/1 instead of 33/33/34.)

Completed Transactions:

com.oltpbenchmark.benchmarks.temporal.procedures.CheckForeignKeyRangeAgg/01 [72064] ********************************************************************************

com.oltpbenchmark.benchmarks.temporal.procedures.CheckForeignKeyLag/02 [71479] *******************************************************************************

com.oltpbenchmark.benchmarks.temporal.procedures.CheckForeignKeyExists/03 [71529] *******************************************************************************

com.oltpbenchmark.benchmarks.temporal.procedures.Noop/04 [ 4585] *****

Aborted Transactions:

<EMPTY>

Rejected Transactions (Server Retry):

<EMPTY>

Rejected Transactions (Retry Different):

<EMPTY>

Unexpected SQL Errors:

com.oltpbenchmark.benchmarks.temporal.procedures.CheckForeignKeyRangeAgg/01 [80861] ********************************************************************************

com.oltpbenchmark.benchmarks.temporal.procedures.CheckForeignKeyLag/02 [80764] *******************************************************************************

com.oltpbenchmark.benchmarks.temporal.procedures.CheckForeignKeyExists/03 [80478] *******************************************************************************

More than half of the transactions had an invalid reference.

And if we put one of those into EXPLAIN ANALYZE, we see that most of the plan was never executed:

Result (actual time=0.034..0.035 rows=0 loops=1)

One-Time Filter: ((InitPlan 1).col1 AND (InitPlan 2).col1 AND (NOT (InitPlan 4).col1))

InitPlan 1

-> LockRows (actual time=0.033..0.033 rows=0 loops=1)

-> Index Scan using employees_pkey on employees x (actual time=0.033..0.033 rows=0 loops=1)

Index Cond: ((id = 5999) AND (valid_at && '[2020-10-10,2020-12-12)'::daterange))

Filter: ((COALESCE(lower(valid_at), '-infinity'::date) <= '2020-10-10'::date) AND ('2020-10-10'::date < COALESCE(upper(valid_at), 'infinity'::date)))

InitPlan 2

-> LockRows (never executed)

-> Index Scan using employees_pkey on employees x_1 (never executed)

Index Cond: ((id = 5999) AND (valid_at && '[2020-10-10,2020-12-12)'::daterange))

Filter: ((COALESCE(lower(valid_at), '-infinity'::date) < '2020-12-12'::date) AND ('2020-12-12'::date <= COALESCE(upper(valid_at), 'infinity'::date)))

InitPlan 4

-> LockRows (never executed)

-> Index Scan using employees_pkey on employees pk1 (never executed)

Index Cond: ((id = 5999) AND (valid_at && '[2020-10-10,2020-12-12)'::daterange))

Filter: (('2020-10-10'::date < COALESCE(upper(valid_at), 'infinity'::date)) AND (COALESCE(upper(valid_at), 'infinity'::date) < '2020-12-12'::date) AND (NOT EXISTS(SubPlan 3)))

SubPlan 3

-> LockRows (never executed)

-> Index Scan using employees_pkey on employees pk2 (never executed)

Index Cond: (id = pk1.id)

Filter: ((COALESCE(lower(valid_at), '-infinity'::date) <= COALESCE(upper(pk1.valid_at), 'infinity'::date)) AND (COALESCE(upper(pk1.valid_at), 'infinity'::date) < COALESCE(upper(valid_at), 'infinity'::date)))

In this example, the beginning of the referencing range wasn’t covered, so Postgres never had to check the rest. Essentially the query is a AND b AND c, so Postgres can short-circuit the evaluation as soon as it finds a to be false. Using range_agg or lag doesn’t allow this, because an aggregate/window function has to run to completion to get a result.
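As a rough analogy in Python (not how the Postgres executor is actually implemented), here is the difference between stopping at the first false condition and computing everything before combining. The function names and the three stand-in checks are made up for illustration.

```python
from typing import Callable, Iterable


def run_short_circuit(checks: Iterable[Callable[[], bool]]) -> tuple[bool, int]:
    """Evaluate checks like `a AND b AND c`, stopping at the first False.

    Returns the overall result and how many checks were actually run.
    """
    evaluated = 0
    for check in checks:
        evaluated += 1
        if not check():
            return False, evaluated
    return True, evaluated


def run_aggregate(checks: Iterable[Callable[[], bool]]) -> tuple[bool, int]:
    """Run every check before combining them, as an aggregate has to."""
    results = [check() for check in checks]
    return all(results), len(results)


# Hypothetical stand-ins for the three conditions. The first one fails,
# like the uncovered start of the range in the plan above.
checks = [lambda: False, lambda: True, lambda: True]

print(run_short_circuit(checks))  # (False, 1)
print(run_aggregate(checks))      # (False, 3)
```

Both give the same answer, but the first does a third of the work when the reference is invalid. When every check passes, the two do the same amount of work.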

As confirmation (a bit gratuitous to be honest), I ran the EXISTS benchmark with this bpftrace script:

// Count how many exec nodes per query were required,

// and print a histogram of how often each count happens.

// Run this for each FK implementation separately.

// My hypothesis is that the EXISTS implementation calls ExecProcNode far fewer times,

// but only if the FK is invalid.

u:/home/paul/local/bench-*/bin/postgres:standard_ExecutorStart {

@nodes[tid] = 0

}

u:/home/paul/local/bench-*/bin/postgres:ExecProcNode {

@nodes[tid] += 1

}

u:/home/paul/local/bench-*/bin/postgres:standard_ExecutorEnd {

@calls = hist(@nodes[tid]);

delete(@nodes[tid]);

}

For EXISTS I got this histogram when there were no invalid references:

@calls:

[0] 6 | |

[1] 0 | |

[2, 4) 0 | |

[4, 8) 228851 |@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@|

[8, 16) 1 | |

[16, 32) 1 | |

[32, 64) 2 | |

[64, 128) 2 | |

[128, 256) 2 | |

[256, 512) 5 | |

But with 50%+ errors I got this:

@calls:

[0] 6 | |

[1] 0 | |

[2, 4) 218294 |@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@|

[4, 8) 183438 |@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@ |

[8, 16) 231 | |

[16, 32) 1 | |

[32, 64) 2 | |

[64, 128) 2 | |

[128, 256) 2 | |

[256, 512) 5 | |

So more than half the time, Postgres ran the query with half the steps (maybe one-fourth).
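To put a number on "more than half", here is a quick calculation over the bucket counts from the second histogram. The counts are copied from the output above; the variable names are just for illustration.

```python
# Bucket counts copied from the histogram with 50%+ invalid references.
calls = {
    "[0]": 6,
    "[1]": 0,
    "[2, 4)": 218294,
    "[4, 8)": 183438,
    "[8, 16)": 231,
    "[16, 32)": 1,
    "[32, 64)": 2,
    "[64, 128)": 2,
    "[128, 256)": 2,
    "[256, 512)": 5,
}

total = sum(calls.values())
short_share = calls["[2, 4)"] / total

print(f"{total} executor runs, {short_share:.1%} in the [2, 4) bucket")
```

That comes to about 54% of executor runs landing in the [2, 4) bucket, which was empty when all references were valid.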

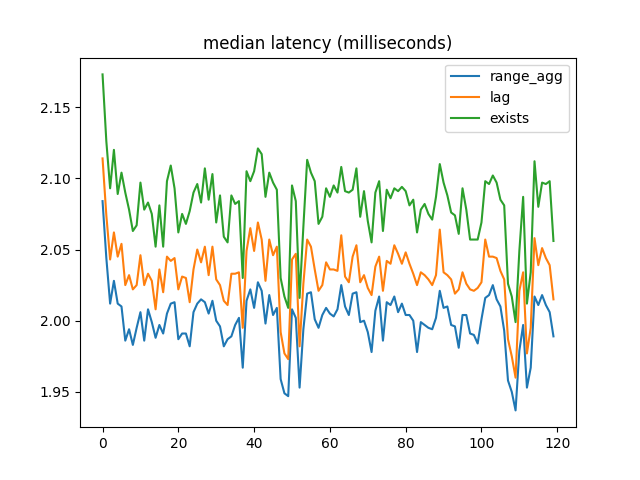

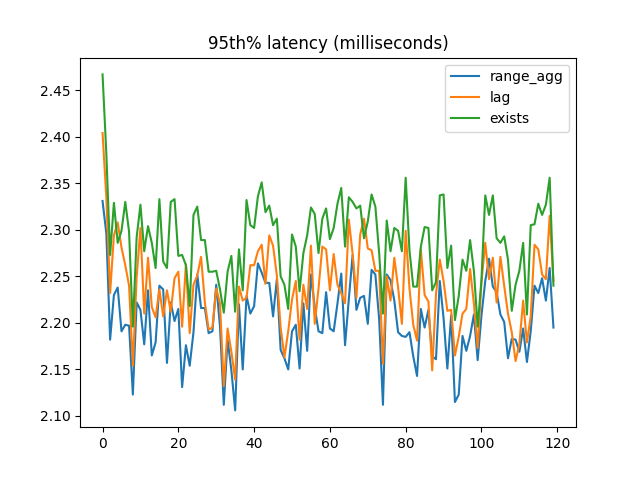

After tuning the random numbers to bring errors closer to 1%, I got results more like the original ones. Mean latency:

Median latency:

95th percentile latency:

Conclusions

All foreign key implementations have expected query plans. We use indexes where we should, etc.

When most foreign key references are valid, range_agg outperforms the other two implementations by a small but consistent amount. But with a large number of invalid references, EXISTS is a lot faster.

In most applications I’ve seen, foreign keys are used as guardrails, and we expect 99% of checks to pass (or more really). When using ON DELETE CASCADE the situation is different, but these benchmarks are for NO ACTION or RESTRICT, and I don’t think CASCADE affords the EXISTS implementation the same shortcuts. So it seems right to optimize for the mostly-valid case, not the more-than-half-invalid case.

These results are good news, because range_agg is also more general: it supports multiranges and custom types.

Further Work

There are more things I’d like to benchmark (and if I do I’ll update this post):

- Replace separate start/end comparisons with range operators in the EXISTS and lag implementations. I just need to make sure they still pass all the tests when I do that.

- Correct the Infinity-vs-null discrepancy.

- Monitor the CPU and disk activity under each implementation and compare the results. I don’t think I’ll see any difference in disk, but CPU might be interesting.

- Compare different scale factors (i.e. starting number of employees/positions).

- Compare implementations when an employee is chopped into many small records, and a single position spans all of them. If range_agg is O(n²) that should be worse than the sorting in the other options.

- Compare temporal foreign keys to non-temporal foreign keys (based on B-tree indexes, not GiST). I’m not sure yet how to do this in a meaningful way. Of course b-trees are faster in general, but how do I use them to achieve the same primary key and foreign key constraints? Maybe the best way is to create the tables without constraints, give them only b-tree indexes, and run the direct SELECT statements, not the DML.

Benchbase Documentation

Benchbase is a framework from Carnegie Mellon for benchmarking databases. It comes with support for about 20 benchmarks and about as many DBMSes.

Benchbase started life as OLTPBench and was introduced in an academic paper from 2014.

Using Benchbase the last month, I found the documentation to be pretty shallow, so this is my effort to improve things. A lot of this material was covered in my pdxpug talk last week.

Running

Benchbase is written in Java and uses Maven to build and run.

Following their README, first you build a tarball for your DBMS like this:

./mvnw clean package -P postgres

Then you expand the tarball and run a benchmark like this:

cd target

tar xvzf benchbase-postgres.tgz

cd benchbase-postgres

java -jar benchbase.jar -b tpcc -c config/postgres/sample_tpcc_config.xml --create=true --load=true --execute=true

The -b option says which benchmark you want to run.

The -c option points to a config file (covered below).

The --create option doesn’t run CREATE DATABASE, but creates the schema for the benchmark.

The --load option fills the schema with its starting data. The time for this is not included in the benchmark results.

The --execute option actually runs the benchmark.

I often ran --create=true --load=true --execute=false to populate a database named e.g. benchbase_template, then createdb -T benchbase_template benchbase to make a quick copy, then --create=false --load=false --execute=true to run the benchmark. That helps iteration time a lot when you have a big load. But for higher-quality results you should do it all in one go, after running initdb, as Melanie Plageman points out in one of her talks. (Sorry, I haven’t been able to find the reference again, but if I do I’ll post a link here.)
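The load-once, copy, then execute workflow can be sketched as a small Python helper that only builds and prints the three commands. The two config file names are hypothetical: I’m assuming two copies of the sample config that differ only in the database name in the URL (benchbase_template for the load, benchbase for the run).

```python
import shlex


def bool_flag(value: bool) -> str:
    """Render a Python bool the way the Benchbase flags expect it."""
    return "true" if value else "false"


def benchbase_cmd(config: str, create: bool, load: bool, execute: bool) -> list[str]:
    """Build the argv for one Benchbase run with the flags described above."""
    return [
        "java", "-jar", "benchbase.jar",
        "-b", "tpcc",
        "-c", config,
        f"--create={bool_flag(create)}",
        f"--load={bool_flag(load)}",
        f"--execute={bool_flag(execute)}",
    ]


# Hypothetical config files: identical except for the database in the URL.
steps = [
    # 1. Create the schema and load data into benchbase_template.
    benchbase_cmd("config/postgres/tpcc_template.xml", True, True, False),
    # 2. Make a quick copy of the loaded database.
    ["createdb", "-T", "benchbase_template", "benchbase"],
    # 3. Run the benchmark against the copy.
    benchbase_cmd("config/postgres/tpcc_run.xml", False, False, True),
]

for argv in steps:
    print(shlex.join(argv))
```

Printing rather than executing keeps the sketch safe to run anywhere; you could pass each list to subprocess.run instead. To repeat a run you would presumably drop benchbase and redo steps 2 and 3.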

If you are writing Java code for your own benchmark, then this one-liner is a lot faster than all that tarball stuff:

./mvnw clean compile exec:java -P postgres -Dexec.args="-b tpcc -c config/postgres/sample_tpcc_config.xml --create=true --load=true --execute=true"

Of course you can skip the clean and compile if you like.

Unfortunately the exec:java target has been broken since 2023, but I submitted a pull request.

Configuration

The benchmark behavior is controlled by the XML config file. The most complete docs are in the original OLTPBench repo’s Github wiki, although if you read the paper you’ll learn many other things you can control with this file. You can also look at a sample config file for your benchmark + database.

The file begins with connection details like this:

<type>POSTGRES</type>

<driver>org.postgresql.Driver</driver>

<url>jdbc:postgresql://localhost:5432/benchbase?sslmode=disable&amp;ApplicationName=tpcc&amp;reWriteBatchedInserts=true</url>

<username>admin</username>

<password>password</password>

The <isolation> element controls the transaction isolation level:

<isolation>TRANSACTION_SERIALIZABLE</isolation>

You can ask to reconnect after a connection failure:

<reconnectOnConnectionFailure>true</reconnectOnConnectionFailure>

I haven’t investigated exactly how that is used.

You can also open a new connection for every transaction:

<newConnectionPerTxn>true</newConnectionPerTxn>

By default that is false, but you may want to make it true if you are focusing on your database’s connection overhead.

Loading

Here are some elements that apply to the loading step (not the actual benchmark run):

<scalefactor>1</scalefactor>

<batchsize>128</batchsize>

Each benchmark interprets scalefactor in its own way. For TPC-C this is the number of warehouses. For Twitter you get 500 users and 20,000 tweets, multiplied by the scalefactor.

Then batchsize just tells the loader how to combine insert statements, for a quicker load.
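Here is a small sketch of the arithmetic for the Twitter benchmark, using the per-scalefactor sizes given above. The batch count is my own simplification: it assumes the loader groups rows into batches of at most batchsize, which I haven’t verified against the loader code.

```python
import math

# Per-scalefactor sizes for the Twitter benchmark, as stated above.
TWITTER_USERS_PER_SF = 500
TWITTER_TWEETS_PER_SF = 20_000


def twitter_rows(scalefactor: int) -> dict[str, int]:
    """Starting row counts for a given scalefactor."""
    return {
        "users": TWITTER_USERS_PER_SF * scalefactor,
        "tweets": TWITTER_TWEETS_PER_SF * scalefactor,
    }


def insert_batches(rows: int, batchsize: int) -> int:
    """Assumption: rows are loaded in batches of at most `batchsize` rows."""
    return math.ceil(rows / batchsize)


rows = twitter_rows(10)
print(rows)                                 # {'users': 5000, 'tweets': 200000}
print(insert_batches(rows["tweets"], 128))  # 1563
```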

Execution

You also list all the “procedures” the benchmark is capable of (or just the ones you care about):

<transactiontypes>

<transactiontype>

<name>NewOrder</name>

</transactiontype>

<transactiontype>

<name>Payment</name>

</transactiontype>

<transactiontype>

<name>OrderStatus</name>

</transactiontype>

<transactiontype>

<name>Delivery</name>

</transactiontype>

<transactiontype>

<name>StockLevel</name>

</transactiontype>

</transactiontypes>

Each procedure is defined in a Java file.

Besides <name>, you can also include <preExecutionWait> and <postExecutionWait> to give a delay in milliseconds before/after running the transaction. So this is one way to add “think time”.

There is also a concept of “supplemental” procedures, but that is not controlled by the config file. Only the SEATS and AuctionMark benchmarks use it. From quickly scanning the code, I think it lets a benchmark define procedures without depending on the user to list them. They won’t be added to the normal transaction queue, but the benchmark can run them elsewhere as needed. For example SEATS uses its supplemental procedure to find out which airports/flights/etc were added in the load step, so it can use them.

The top-level <terminals> element controls the concurrency. This is how many simultaneous connections you want:

<terminals>1</terminals>

But the real behavior comes from the <works> element. This contains <work> child elements, each one a “phase” of your benchmark. For example:

<works>

<work>

<time>60</time>

<rate>10000</rate>

<weights>45,43,4,4,4</weights>

</work>

</works>

Here we have one phase lasting 60 seconds.

The <weights> refer to the <transactiontypes> above. Each weight is a percentage giving the share of that procedure in the total transactions. They must add to 100%.
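Since the weights are matched to the procedures by position, it is easy to get them out of sync when you edit a config. Here is a sketch of a sanity check you could run on your own config before a benchmark. It is not part of Benchbase; the check_weights function is hypothetical, and the trimmed-down config with a `<parameters>` root is an assumption for the example.

```python
import xml.etree.ElementTree as ET

# A trimmed-down config holding only the elements this check needs.
CONFIG = """
<parameters>
  <transactiontypes>
    <transactiontype><name>NewOrder</name></transactiontype>
    <transactiontype><name>Payment</name></transactiontype>
    <transactiontype><name>OrderStatus</name></transactiontype>
    <transactiontype><name>Delivery</name></transactiontype>
    <transactiontype><name>StockLevel</name></transactiontype>
  </transactiontypes>
  <works>
    <work>
      <time>60</time>
      <rate>10000</rate>
      <weights>45,43,4,4,4</weights>
    </work>
  </works>
</parameters>
"""


def check_weights(xml_text: str) -> list[dict[str, float]]:
    """Pair each phase's weights with procedure names, by position.

    Raises ValueError if a phase has the wrong number of weights
    or if they don't add up to 100.
    """
    root = ET.fromstring(xml_text.strip())
    names = [t.findtext("name") for t in root.iter("transactiontype")]
    phases = []
    for i, work in enumerate(root.iter("work"), start=1):
        weights = [float(w) for w in work.findtext("weights").split(",")]
        if len(weights) != len(names):
            raise ValueError(
                f"phase {i}: {len(weights)} weights for {len(names)} procedures"
            )
        if abs(sum(weights) - 100) > 1e-9:
            raise ValueError(f"phase {i}: weights add to {sum(weights)}, not 100")
        phases.append(dict(zip(names, weights)))
    return phases


print(check_weights(CONFIG))
```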

The <rate> gives the targeted transactions per second (per terminal). Mostly this is a way to slow things down, not to speed things up: it is another way to include “think time” in between transactions. If your run doesn’t achieve this rate, it’s not an error.
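To get a feel for what these numbers imply, here is a back-of-the-envelope calculation for the example phase above. It is only an upper bound: as noted, a run that falls short of the rate is not an error. The phase_budget function is hypothetical, and the procedure names are paired with the weights in the order they were listed.

```python
def phase_budget(time_s: int, rate: int, weights: dict[str, float]) -> dict[str, int]:
    """Most transactions of each type one terminal could run in a phase,
    if it hit the target rate for the whole phase."""
    total = time_s * rate
    return {name: round(total * pct / 100) for name, pct in weights.items()}


# Weights from the example phase, in the order the procedures were listed.
weights = {
    "NewOrder": 45,
    "Payment": 43,
    "OrderStatus": 4,
    "Delivery": 4,
    "StockLevel": 4,
}

budget = phase_budget(60, 10000, weights)
print(budget["NewOrder"])     # 270000
print(sum(budget.values()))   # 600000

# Spacing between transaction starts at the target rate, in milliseconds.
print(1000 / 10000)           # 0.1
```

A rate of 10000 leaves only a tenth of a millisecond per transaction, so in practice it acts as "no limit". A much lower rate is what you would use to simulate think time.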

Each phase can override the top-level concurrency with <active_terminals>5</active_terminals>.

Also you can let the phase start gradually with <work arrival="poisson">. The OLTP-Bench paper demonstrates this technique.

In addition a benchmark may understand other XML elements. For example Twitter lets you give <tracefile> and <tracefile2>, and the benchmark will use those to read tweet ids and user ids (respectively), which it will use as inputs for its transactions (but not every transaction type uses both).
