Postgres Logical Replication

Logical decoding is different from logical replication. Logical replication is built on logical decoding.

Logical Decoding

Logical decoding exports the changes happening in your database. They are streamed through a replication slot. The slot keeps track of how far you’ve read, so that it doesn’t skip or repeat messages. (Btw how is it not “at least once” delivery?) Basically you are getting a stream of WAL records. But whereas a physical replication slot gives you the exact binary of the WAL as it gets written, a logical replication slot includes an output plugin that encodes the WAL in a (hopefully) more accessible way.

You can write output plugins yourself by implementing various callbacks to handle different kinds of database activity. The only essential callbacks are LogicalDecodeBeginCB, LogicalDecodeChangeCB, and LogicalDecodeCommitCB, but there are many optional ones. Some of those let you implement two-phase commit (see e.g. Kleppmann 352–359).

You can also add an output writer, but I’m not sure how it differs from a plugin. Somehow it only requires implementing three callbacks instead of many. I don’t see any way to “install” your writer or attach it to a slot. I’ll come back to this some other day.

The most popular output plugin is wal2json. When you read from the replication slot, you get each WAL record as a JSON object. Postgres also has a built-in test_decoding output plugin (the default), which gives you the details as plain text.

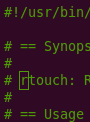

Postgres comes with a tool called pg_recvlogical you can use to read from a replication slot. It can also create and drop slots. For example:

pg_recvlogical --create-slot --if-not-exists --slot s --dbname paul

pg_recvlogical --start --slot s --dbname paul -f -If you let that run then go into psql and issue some inserts/updates/deletes, you will see the messages getting sent through the slot.

paul=# create table t (a text primary key);

CREATE TABLE

paul=# insert into t values ('a'), ('b');

INSERT 0 2

paul=# update t set a = 'aa' where a = 'a';

UPDATE 1

paul=# delete from t where a = 'b';

DELETE 1Our pg_recvlogical command prints:

BEGIN 19715

COMMIT 19715

BEGIN 19716

table public.t: INSERT: a[text]:'a'

table public.t: INSERT: a[text]:'b'

COMMIT 19716

BEGIN 19717

table public.t: UPDATE: old-key: a[text]:'a' new-tuple: a[text]:'aa'

COMMIT 19717

BEGIN 19718

table public.t: DELETE: a[text]:'b'

COMMIT 19718If you want JSON instead, you can create the slot with -P wal2json (after installing it).

The tricky part here is identifying which row changed for updates and deletes. Because t had a primary key, Postgres used it, because it should uniquely identify a row on the other end. But you can do other things too, based on the table’s REPLICA IDENTITY. You can set this to DEFAULT (i.e. using the primary key if present), NOTHING, USING INDEX <index>, or FULL.

NOTHING is the same as DEFAULT with no primary key: there is no identifying information available.

USING INDEX takes a unique index with no nullable parts, and uses that.

FULL uses all the attributes of the row. It works for any table, but it’s less performant.

For more details on REPLICA IDENTITY you can read my article here.

Let’s drop the table, create it without a primary key, and run the same commands again:

paul=# create table t (a text);

CREATE TABLE

paul=# insert into t values ('a'), ('b');

INSERT 0 2

paul=# update t set a = 'aa' where a = 'a';

UPDATE 1

paul=# delete from t where a = 'b';

DELETE 1Now the logical decoding output doesn’t have any old-row identifiers:

BEGIN 19720

COMMIT 19720

BEGIN 19721

table public.t: INSERT: a[text]:'a'

table public.t: INSERT: a[text]:'b'

COMMIT 19721

BEGIN 19722

table public.t: UPDATE: a[text]:'aa'

COMMIT 19722

BEGIN 19723

table public.t: DELETE: (no-tuple-data)

COMMIT 19723Missing a REPLICA IDENTITY is no problem for logical decoding. For logical replication, it will raise an error when you try to update or delete.

Clarification of “Streaming”

In the Postgres docs, the word “streaming” can be misleading. Often it means physical replication (originally the only kind of streaming replication we had), in contrast to logical—but not always.

Sometimes “streaming replication” constrasts with “log shipping”. It means the standby opens a connection to the primary and constantly pulls data via the streaming replication protocol. In log shipping, the primary has an archive_command to copy WAL files where the standby can read them. (In fact you usually combine these methods.) That said, this page is at the same time equating streaming replication with physical. You could set up a standby with logical replication, but that’s not what it’s describing.

In another place, “streaming” means the streaming replication protocol in contrast to SQL commands.

Perhaps most often, “streaming replication” means “physical replication” in contrast to logical. For instance this note about logical replication slots should perhaps s/streaming/physical/:

PostgreSQL also has streaming replication slots (see Section 26.2.5), but they are used somewhat differently there.

Likewise the three opening paragraphs of the page for “Replication” configuration seem to constrast streaming replication to logical replication.

So just watch out when you’re reading! More precise language would contast “physical” with “logical”, and be clear that both are “streaming.” I’m hopeful from that mailing list thread so far that we might start moving the docs in that direction.

Logical Replication

Logical replication is built on top of logical decoding and replication slots. But instead of working at such a low level, it replicates changes from one Postgres table to another (usually in another cluster). The publisher side uses a built-in output plugin named pgoutput. To set it up, you use CREATE PUBLICATION on the sender and CREATE SUBSCRIPTION on the receiver. Like this:

sender=# CREATE PUBLICATION p FOR TABLE t1, t2;

. . .

receiver=# CREATE SUBSCRIPTION s CONNECTION 'host=yonder dbname=thisnthat' PUBLICATION p;But first you’ll need to have tables in the receiving database named t1 and t2, with at least enough columns to match their sources.

There are lots of options. For the publication, you can create it FOR ALL TABLES or FOR TABLES IN SCHEMA s1, s2. You can publish a subset of event types (insert, update, delete, truncate). You can publish a subset of each table’s columns. You can do special things with partitions. You can have a WHERE clause to publish only certain rows (assuming the publication is for a single table).

The subscription side has lots of options too. See the docs above for details.

Once you have a publication and subscription created, you will see an entry in the sender’s pg_stat_replication:

sender=# select * from pg_stat_replication \gx

-[ RECORD 1 ]----+------------------------------

pid | 44344

usesysid | 10

usename | paul

application_name | s

client_addr | NULL

client_hostname | NULL

client_port | -1

backend_start | 2024-10-13 11:37:18.361684-05

backend_xmin | NULL

state | streaming

sent_lsn | 0/11DE0AB8

write_lsn | 0/11DE0AB8

flush_lsn | 0/11DE0AB8

replay_lsn | 0/11DE0AB8

write_lag | NULL

flush_lag | NULL

replay_lag | NULL

sync_priority | 0

sync_state | async

reply_time | 2024-10-13 11:37:48.419264-05Unlike physical replication, logical replication does not transfer DDL changes.

Synchronous Replication

Both logical decoding and logical replication can be synchronous. This means we can configure the primary to report successful commits only after hearing from the standby(s) that they were successful. (You can also do this with physical replication.) To enable this, first set synchronous_standby_names to a comma-separated list of names you will wait for. Then set synchronous_commit to remote_apply, on, remote_write, or local. (The default is on.)

remote_apply is the most thorough: the standbys must flush the commit record to disk and apply it (so that other connections can see it). on also requires flushing to disk (e.g. WAL), but it needn’t be applied. remote_write requires standbys to perform the write to disk, but they need not have confirmation from the OS that it’s been flushed. And local will not wait for standbys. (This is sort of pointless if you put things in synchronous_standby_names, but maybe it lets you disable synchronous replication temporarily without erasing that other setting.)

The names to put in synchronous_standby_names should match the connection’s application_name, one of the parameters available when opening any connection. You can set it in the connection string if you like. Otherwise it defaults to the subscription name. (For physical replication, standbys omitting this from their connection string default to their cluster_name or failing that walsender.) You can see the application_name in pg_stat_replication above matches the subscription name.

If you have more than one standby listed as synchronous, the primary will wait on confirmation from all of them (by default: you can do more nuanced things with synchronous_standby_names if you like).

If you are using synchronous logical replication, this is an important warning:

A synchronous replica receiving changes via logical decoding will work in the scope of a single database. Since, in contrast to that,

synchronous_standby_namescurrently is server wide, this means this technique will not work properly if more than one database is actively used.

According to commit 3cb828dbe2 the problem is a potential deadlock from locking catalog tables. The commit and the docs have the specific lock sequences that are dangerous, and they don’t seem hard to avoid.

My first impression on reading the note was a different scenario though. Consider: you have two databases, both replicated with logical replication, but each to a different standby. You make a change in one database, and now you’re waiting for both standbys to confirm. But one never got the change so will never answer.

But in fact Postgres is smarter than that and only waits for the standbys that are relevant. I tested these scenarios:

- One database in the sender cluster replicates to a database in the receiver cluster, and I update a table in a different database in the sender cluster. (I am “actively using” it.)

- Two databases in the sender cluster each replicate to the same database in the receiver cluster.

- Two databases in the sender cluster each replicate to databases in different clusters.

Logical Replication combined with Physical Replication Failover

If your primary cluster has both a physical replication standby and logical replication subscribers, and then you fail over to the standby, you need a way to point the logical subscribers at the newly-promoted cluster (i.e. the former standby). You want to do that without losing data.

If (before the failure) you set sync_replication_slots to true on the physical standby, it will maintain the same slots the primary has, including keeping track of how far each has been read. That way when your logical subscribers connect to the new primary, they can resume where they left off.

It is also a good idea to use synchronized_standby_slots, to make sure the logical subscribers don’t get ahead of the physical standby.

Code

Writing

Talks

- Temporal Benchmark

- Inlining Postgres Functions

- Temporal Databases 2024

- Papers We Love: Temporal Alignment

- Benchbase for Postgres Temporal Foreign Keys

- Hacking Postgres: the Exec Phase

- Progress Adding SQL:2011 Valid Time to Postgres

- Temporal Databases: Theory and Postgres

- A Rails Timezone Strategy

- Rails and SQL

- Async Programming in iOS

- Errors in Node.js

- Wharton Web Intro

- PennApps jQuery Intro