k3s Behind Nginx

Here on illuminatedcomputing.com I’ve got a bunch of sites served by nginx, but I’d like to run a little k3s cluster as well. The main benefit would be isolation. That is always helpful, but it especially matters for staging sites for some customers who don’t update very often.

Instead of migrating everything all at once, I want to keep my host nginx but let it reverse proxy to k3s for sites running there. Then I will block direct traffic to k3s, so that there is only one way to get there. I realize this is not really a “correct” way to do k8s, but for a tiny setup like mine it makes sense. Maybe I should have just bought a separate box for k3s, but I find pushing tools a bit like this is a good way to learn how they really work, and that’s what happened here.

It was harder than I thought. I found one or two people online seeking to do the same thing, but there were no good answers. I had to figure it out on my own, and now maybe this post will help someone else.

The first step was to get k3s’s ingress off ports 80 and 443, since the host nginx already owns those. I’m using the ingress-nginx ingress controller via a Helm chart. In my values.yaml I have its Service use 8080 and 8443 instead:

    ingress-nginx:
      controller:
        enableHttp: true
        enableHttps: true
        service:
          ports:
            http: 8080
            https: 8443

Then I can see the Service is using those ports:

    paul@tal:~/src/illuminatedcomputing/k8s$ k get services -A
    NAMESPACE   NAME                               TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)                         AGE
    ingress     ingress-ingress-nginx-controller   LoadBalancer   10.43.91.109   107.150.34.82   8080:31333/TCP,8443:30702/TCP   7d20h
    ...

Setting up nginx to reverse proxy was also no problem. For example here is a private docker registry I’m running:

    server {
      listen 443 ssl;
      server_name docker.illuminatedcomputing.com;

      ssl_certificate ssl/docker.illuminatedcomputing.com.crt;
      ssl_certificate_key ssl/docker.illuminatedcomputing.com.key;

      location / {
        proxy_pass https://127.0.0.1:8443;
        proxy_set_header Host "docker.illuminatedcomputing.com";
      }
    }

    server {
      listen 80;
      server_name docker.illuminatedcomputing.com;

      location / {
        proxy_pass http://127.0.0.1:8080;
        proxy_set_header Host "docker.illuminatedcomputing.com";
      }
    }

The only tricky part is the ssl cert. I already had the cluster built to get certs from LetsEncrypt with cert-manager. So I have a little cron script that pulls out the k8s Secret and puts it where the host nginx can find it:

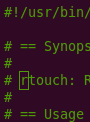

    #!/bin/bash

    export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

    # docker.illuminatedcomputing.com
    kubectl get secret -n docker-registry docker-registry-tls -o json | jq -r '.data["tls.crt"] | @base64d' > /etc/nginx/ssl/docker.illuminatedcomputing.com.crt
    kubectl get secret -n docker-registry docker-registry-tls -o json | jq -r '.data["tls.key"] | @base64d' > /etc/nginx/ssl/docker.illuminatedcomputing.com.key

Probably it would be easier to run certbot on the host and push the cert into k8s (or just terminate TLS), but using cert-manager is what I’d do for a customer, and I’m hopeful that eventually I’ll drop the reverse proxy altogether.
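One thing a script like this leaves open is that nginx only reads certificate files when it starts or reloads, so a renewed cert sits unused until the next reload. Redirecting with > also truncates the old file even when kubectl fails. Here is a minimal sketch of one way to handle both, not the script I actually run. The helper names install_if_changed and sync_secret_key are made up for this example, and it assumes a jq new enough to have @base64d.

```shell
#!/bin/bash
# Sketch only: install_if_changed and sync_secret_key are hypothetical helpers.
export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

# Replace $2 with $1 only when $1 is non-empty and the contents differ.
# Returns 0 if the file was replaced, 1 if nothing changed.
install_if_changed() {
  local src=$1 dest=$2
  if [ -s "$src" ] && ! cmp -s "$src" "$dest" 2>/dev/null; then
    mv "$src" "$dest"
    return 0
  fi
  rm -f "$src"
  return 1
}

# Decode one key of a Secret into a temp file beside the destination,
# then install it only if it differs from what is already there.
sync_secret_key() {
  local namespace=$1 secret=$2 key=$3 dest=$4
  local tmp
  tmp=$(mktemp "${dest}.XXXXXX") || return 2
  # "// empty" keeps a missing key from being decoded as the string "null".
  kubectl get secret -n "$namespace" "$secret" -o json \
    | jq -r --arg k "$key" '.data[$k] // empty | @base64d' > "$tmp"
  install_if_changed "$tmp" "$dest"
}

# Example cron body (commented out so the helpers can be sourced on their own):
# changed=0
# sync_secret_key docker-registry docker-registry-tls tls.crt /etc/nginx/ssl/docker.illuminatedcomputing.com.crt && changed=1
# sync_secret_key docker-registry docker-registry-tls tls.key /etc/nginx/ssl/docker.illuminatedcomputing.com.key && changed=1
# [ "$changed" -eq 1 ] && nginx -t && nginx -s reload
```

Writing to a temp file first means a failed kubectl call leaves the old cert in place, and the reload only happens when something actually changed.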

So at this point connecting works:

    curl -v https://docker.illuminatedcomputing.com/v2/_catalog

(Of course it will be a 401 without the credentials, but you are still getting through to the service.)

The problem is that this works too:

    curl -v https://docker.illuminatedcomputing.com:8443/v2/_catalog

So how can I block that port from everything but the host nginx? I tried making the controller bind to just 127.0.0.1, e.g. with this config:

    ingress-nginx:
      controller:
        config:
          bind-address: "127.0.0.1"
        enableHttp: true
        enableHttps: true
        service:
          externalIPs:
            - "127.0.0.1"
          ports:
            http: 8080
            https: 8443

The bind-address line goes into the ConfigMap used to generate the controller’s nginx.conf. It doesn’t work though: that 127.0.0.1 is the loopback inside the controller pod’s own network namespace, not the host’s 127.0.0.1.

Using externalIPs (with or without bind-address) also fails. When I add those two lines, the Helm upgrade fails with this error from Kubernetes:

    Error: UPGRADE FAILED: cannot patch "ingress-ingress-nginx-controller" with kind Service: Service "ingress-ingress-nginx-controller" is invalid: spec.externalIPs[0]: Invalid value: "127.0.0.1": may not be in the loopback range (127.0.0.0/8, ::1/128)

So I gave up on that approach.

But what about using iptables to block 8443 and 8080 from the outside? That’s probably simpler anyway—although k3s adds a big pile of its own iptables rules, and diving into that was a bit intimidating.

The first thing I tried was putting a rule at the top of the INPUT chain. I tried all these:

    iptables -I INPUT -p tcp \! -s 127.0.0.1 --dport 8443 -j DROP
    iptables -I INPUT -p tcp \! -i lo --dport 8443 -j DROP
    iptables -I INPUT -p tcp -i enp2s0 --dport 8443 -j DROP

But none of those worked. I could still get through.

At this point a friend asked ChatGPT for advice, but it wasn’t very helpful. It told me:

Instead of having the ingress controller listen on an external IP or trying to make it listen only on 127.0.0.1, configure your host’s nginx to proxy_pass to your k3s services.

Yes, I had explained I was doing that. Also:

You could create a network policy that only allows traffic to the ingress-nginx pods from within the cluster itself.

But that will block the reverse proxy too.

So the cyber Pythia was not coming through for me. I was going to have to figure it out on my own. That meant coming to grips with all the rules k3s was installing.

I started with adding some logging, for example:

    iptables -I INPUT -p tcp -d 107.150.34.82 -j LOG --log-prefix '[PJPJPJ] '

Tailing /var/log/syslog, I could see messages for 443 requests, but nothing for 8443!

So I took a closer look at the nat table (whose PREROUTING chain is processed before the filter table’s INPUT chain), and I found some relevant rules:

    -A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
    -A KUBE-EXT-2ZARXDYICCJUF4UZ -m comment --comment "masquerade traffic for ingress/ingress-ingress-nginx-controller:https external destinations" -j KUBE-MARK-MASQ
    -A KUBE-EXT-2ZARXDYICCJUF4UZ -j KUBE-SVC-2ZARXDYICCJUF4UZ
    -A KUBE-EXT-DBDMS67BVV2C2LTP -m comment --comment "masquerade traffic for ingress/ingress-ingress-nginx-controller:http external destinations" -j KUBE-MARK-MASQ
    -A KUBE-EXT-DBDMS67BVV2C2LTP -j KUBE-SVC-DBDMS67BVV2C2LTP
    -A KUBE-SEP-RQCBIXXO7M53R2WC -s 10.42.0.42/32 -m comment --comment "ingress/ingress-ingress-nginx-controller:https" -j KUBE-MARK-MASQ
    -A KUBE-SEP-RQCBIXXO7M53R2WC -p tcp -m comment --comment "ingress/ingress-ingress-nginx-controller:https" -m tcp -j DNAT --to-destination 10.42.0.42:443
    -A KUBE-SEP-TXLMBMTNQTOOKDI3 -s 10.42.0.42/32 -m comment --comment "ingress/ingress-ingress-nginx-controller:http" -j KUBE-MARK-MASQ
    -A KUBE-SEP-TXLMBMTNQTOOKDI3 -p tcp -m comment --comment "ingress/ingress-ingress-nginx-controller:http" -m tcp -j DNAT --to-destination 10.42.0.42:80
    -A KUBE-SERVICES -d 107.150.34.82/32 -p tcp -m comment --comment "ingress/ingress-ingress-nginx-controller:https loadbalancer IP" -m tcp --dport 8443 -j KUBE-EXT-2ZARXDYICCJUF4UZ
    -A KUBE-SERVICES -d 107.150.34.82/32 -p tcp -m comment --comment "ingress/ingress-ingress-nginx-controller:http loadbalancer IP" -m tcp --dport 8080 -j KUBE-EXT-DBDMS67BVV2C2LTP
    -A KUBE-SVC-2ZARXDYICCJUF4UZ ! -s 10.42.0.0/16 -d 10.43.91.109/32 -p tcp -m comment --comment "ingress/ingress-ingress-nginx-controller:https cluster IP" -m tcp --dport 8443 -j KUBE-MARK-MASQ
    -A KUBE-SVC-2ZARXDYICCJUF4UZ -m comment --comment "ingress/ingress-ingress-nginx-controller:https -> 10.42.0.42:443" -j KUBE-SEP-RQCBIXXO7M53R2WC
    -A KUBE-SVC-DBDMS67BVV2C2LTP ! -s 10.42.0.0/16 -d 10.43.91.109/32 -p tcp -m comment --comment "ingress/ingress-ingress-nginx-controller:http cluster IP" -m tcp --dport 8080 -j KUBE-MARK-MASQ
    -A KUBE-SVC-DBDMS67BVV2C2LTP -m comment --comment "ingress/ingress-ingress-nginx-controller:http -> 10.42.0.42:80" -j KUBE-SEP-TXLMBMTNQTOOKDI3

If you follow how that bounces around, the packet eventually gets its destination rewritten (DNAT) to 10.42.0.42, either :443 or :80. Since that is a pod IP rather than the host’s own address, the kernel forwards the packet instead of delivering it locally, so it goes through the FORWARD chain. That’s why a connection to 8443 never hits the INPUT chain.
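Following those jumps by eye gets tedious, so here is a small sketch that does it mechanically. trace_dnat is a hypothetical helper written for this post, not part of k3s or iptables. It reads rules in iptables-save format on stdin, starts at the KUBE-SERVICES rule for a given port, and follows the first non-masquerade jump in each chain until it reaches a DNAT rule. It assumes a single endpoint per service, as in the rules above; a service with several pods has multiple probabilistic jumps and this only follows the first.

```shell
#!/bin/bash
# Sketch only: trace_dnat is a hypothetical helper name.
# Usage: iptables-save -t nat | trace_dnat 8443
trace_dnat() {
  awk -v port="$1" '
    $1 == "-A" {
      chain = $2; target = ""; dport = ""; dest = ""
      for (i = 3; i <= NF; i++) {
        if ($i == "-j") target = $(i + 1)
        if ($i == "--dport") dport = $(i + 1)
        if ($i == "--to-destination") dest = $(i + 1)
      }
      # Masquerade marking does not change where the packet goes.
      if (target == "KUBE-MARK-MASQ") next
      if (chain == "KUBE-SERVICES") {
        if (dport == port) start = target
        next
      }
      if (target == "DNAT") dnat[chain] = dest
      else if (!(chain in jump)) jump[chain] = target
    }
    END {
      chain = start
      path = "KUBE-SERVICES"
      # Cap the hops so a malformed ruleset cannot loop forever.
      while (chain != "" && hops++ < 20) {
        path = path " -> " chain
        if (chain in dnat) {
          print path " -> DNAT " dnat[chain]
          exit 0
        }
        chain = jump[chain]
      }
      exit 1
    }'
}
```

Fed the rules listed above with port 8443, this should print a path through KUBE-EXT-2ZARXDYICCJUF4UZ, KUBE-SVC-2ZARXDYICCJUF4UZ and KUBE-SEP-RQCBIXXO7M53R2WC that ends in DNAT 10.42.0.42:443. It exits non-zero when no rule matches the port.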

So the solution was to drop the traffic in the nat table instead:

    root@www:~# iptables -I PREROUTING -t nat -p tcp -i enp2s0 --dport 8443 -j DROP
    iptables v1.8.4 (legacy):
    The "nat" table is not intended for filtering, the use of DROP is therefore inhibited.

Oops, just kidding!

But instead I can just tell 8080 & 8443 to skip all the k3s rewriting:

    iptables -I PREROUTING -t nat -p tcp -i enp2s0 --dport 8443 -j RETURN
    iptables -I PREROUTING -t nat -p tcp -i enp2s0 --dport 8080 -j RETURN

Now those do show up on the INPUT chain, but I don’t even need to DROP them there. There is nothing actually listening on those ports on the host. The controller is still binding to 443 and 80 inside its pod, and k3s is using iptables trickery to reroute connections there. So those two lines above are sufficient, and someone connecting directly gets a Connection refused.
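Rules added with iptables -I live only in the kernel, so they vanish on reboot, and running the same commands twice stacks up duplicates. Here is a minimal sketch of an idempotent version that could run at boot. ensure_skip_rule is a hypothetical helper name, and the IPTABLES variable is only there so the command can be swapped out for testing. Order matters too: the RETURN rules have to sit above the jump to KUBE-SERVICES, so I’m assuming this runs after k3s has installed its own rules. That is worth checking with iptables -t nat -S PREROUTING.

```shell
#!/bin/bash
# Sketch only: ensure_skip_rule is a hypothetical helper name.
# IPTABLES can be pointed at another command for testing.
IPTABLES=${IPTABLES:-iptables}

# Insert a nat PREROUTING RETURN rule for one port unless it already exists.
ensure_skip_rule() {
  local iface=$1 port=$2
  # -C exits 0 when an identical rule is already present.
  if ! "$IPTABLES" -t nat -C PREROUTING -p tcp -i "$iface" --dport "$port" -j RETURN 2>/dev/null; then
    "$IPTABLES" -t nat -I PREROUTING -p tcp -i "$iface" --dport "$port" -j RETURN
  fi
}
```

For my setup the calls would be ensure_skip_rule enp2s0 8080 and ensure_skip_rule enp2s0 8443, run as root.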

That’s it! I hope this is helpful or you at least enjoyed the story.
